The views expressed in this article are the author’s alone and do not represent those of the U.S. Navy, the Department of Defense or the State of North Dakota.

This article proceeds on the supposition that a decades-long unquestioned orthodoxy surrounding digitization and automation in pursuit of speed and efficiency has undermined national resilience and created potential existential security vulnerabilities. Such loss of resilience is not fated but a choice emanating from an identifiable military philosophy of armed conflict that originated in the U.S. military and later spread to government and industry.

Understanding that the roots of our challenge are philosophical, and that humans have a choice to restore resilience and trust, allows us to identify solutions, however radical they may seem, in the existing speed-efficiency paradigm. That solution includes shaping, narrowing and maybe limiting where we allow digitization and AI to come together, but also includes a radical reemphasis on human agency, human education, training, skills and abilities, to include the substantial elevation of human control in existing human-machine teams. Stuart Russell, a world-leading AI expert, when pondering the coexistence of humans and AI, concluded that a reemphasis on the human factor was critical, and that only human cultural change, akin to “ancient Sparta’s military ethos,” could preserve human control and agency in the Age of AI.i

> War-Game Epiphany

Headlines worldwide now proclaim news of accelerating AI capabilities. Task forces are being convened from the White House to the Pentagon to Wall Street to better understand the threats and opportunities this presents.ii Perhaps AI’s most important aspect, potentially existential to national security and critical state and local systems, is the convergence of AI and cybercrime and cyberconflict. But there is a fundamental problem of epic proportions that is being ignored, perhaps purposely, because the solution set might be so radical.

The problem is that, quite simply, there is no technical solution set that alone can assure human control of AI and its increasingly integrated technologies when these are used to power cybercrime and cyberconflict. The radical solution set must include a profound rethinking of human knowledge, skills and abilities, as well as the preservation of tools to assure ultimate human control of digital systems in the face of hacked AI algorithms. The solution set may have to include shaping, narrowing or limiting the reach of AI and digitization. How did I come to this radical insight? And why hasn’t such a solution, costly and neo-Luddite as it might seem, been adopted already?

A decade ago, I saw evidence that a techno-philosophy was gaining unquestioned adherents in domains where hacked AI vulnerability could emerge with portentous and unpredictable consequences. I was attending a war-game exercise sponsored by the Office of Defense, Research and Engineering in the Office of the Secretary of Defense (OSD) that examined the future of technology, conflict and war.iii About the same time, I was asked to present at the Geneva Convention on Certain Conventional Weapons conference on lethal autonomous weapons systems in the spring of 2014.iv Based on the war games and discourse at Geneva, attended by more than 100 ambassadors, it became clear that three transformative technologies would challenge the Department of Defense (DOD) to its core: AI-powered autonomous killing machines; a next generation of ever-more ubiquitous digital, AI-influenced communication networks; and, as anticipated in science fiction, increased human-digital machine integration, for which enormous pressure would build.

But I also took away something else from the games: The sense that the momentumv of the DOD’s R&D/acquisition system was propelling the U.S. military toward a strategic conundrum of historic proportions, that the pursuit of speed and efficiency would create brittleness and undermine trust and resilience that could extend well into the future and become almost irreversible. The three major transformative technologies, mentioned above, progressively replace human decision-making, knowledge, skill and physical abilities with intelligent digital devices (IDDs) that are vulnerable to cyberattack.vi In a pre-cyber conflict age dominated by the U.S., such a replacement of the human with machine might produce all positives: reduced risk to Americans, faster flow of information, higher-performing battlefield units, more efficient state infrastructure and perhaps cost savings. But in the face of rising cyber powers, this proliferation of IDDs in communication networks, robotics and human-decision aids and enhancements may place our national defense and state/local security at risk.vii

Rapid digitization and AI emergence in confluence with cyberwar argue for what may seem counterintuitive but has historical precedent. During this period of uncertainty, DOD, state governments and key infrastructure corporations should slow deployment of digital-AI programs that displace human skill and decision-making and should slow the retirement of mature, stand-alone technologies. In parallel, DOD and the states should reestablish selective training programs to preserve or regain critical human-centric knowledge, skills and abilities.

But can the U.S. military change this trajectory? Yes, but first both political and military decision-makers must understand that the trajectory of automation and AI application is a human choice, neither fated nor inevitable. Yet we are up against a growing inertia of blind acceptance. How did we get here? And what are the origins of this particular philosophy?

> Over-Automation & Privileging Speed

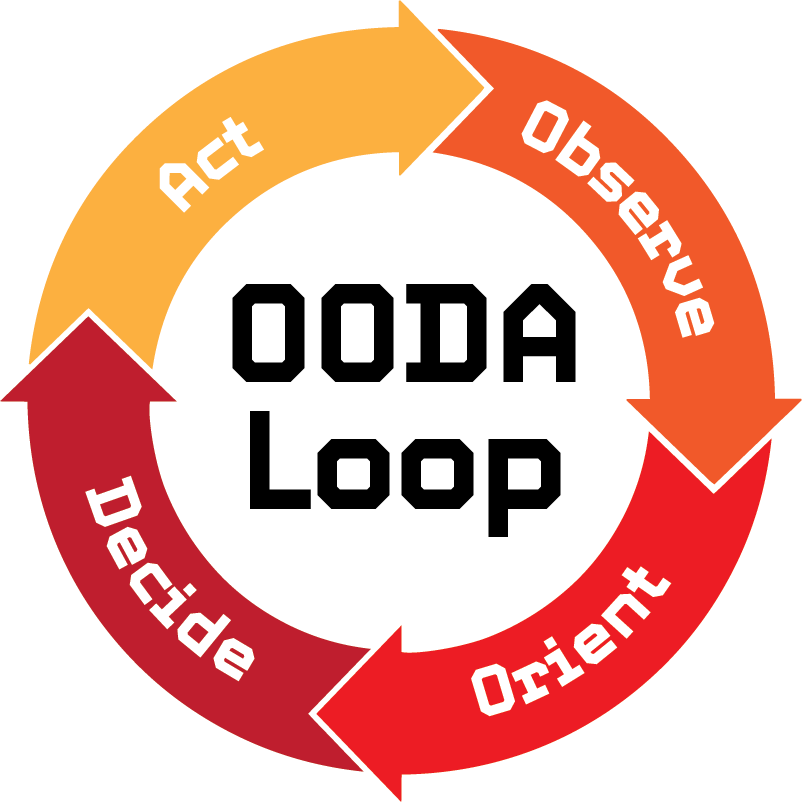

So, what shaped human thinking such that we have privileged digital speed and efficiency at the potential cost of trust and resilience? A philosophy so doctrinaire that it was embedded as an assumption in doctrine and even war games where assumptions should have been tested. The four futuristic war games I attended examined the evolution toward unmanned systems, ever larger information networks and electronic human-machine integration. It was often argued that such electronic-based systems could get inside an enemy’s decision cycle and give us an advantage in what is known as the OODA (Observe, Orient, Decide, Act) Loop. And I was persuaded: Unmanned systems with AI processors could compute faster in many cases than a human; computer-enabled tactical electronic communications systems could transmit more data faster than the human voice or non-computerized communications; and soldiers aided by ever-more electronic and web-enabled devices could allow fewer, less-trained humans to do more tasks faster than personnel without these devices. Many of these are already in the field, including handheld GPS linked to iPads reducing reliance on human navigation skills on land or sea, and computerized translation programs that, while convenient now, will ultimately reduce the incentive for soldiers to maintain natural human-language proficiency.

The OODA (Observe, Orient, Decide, Act) Loop was developed in the 1970s by USAF Colonel John Boyd as a military strategy focusing on agility (by facilitating rapid, effective reactions to high-stakes situations) to overcome an opponent’s raw power. The OODA Loop has also been applied successfully to business and industry, and more recently shown applicable to cybersecurity and cyberwarfare.

But where human action and decision-making are displaced by IDDs, new questions of security arise, now known as cybersecurity. Its close relative, cyberpower, enables an actor to use computer code to take control of, influence or degrade another actor’s IDDs or communications systems.viii Our country continues to proliferate hackable IDDs in an increasing number of systems based on the implicit assumption that the U.S. will maintain information dominance and thus a favorable balance of cyberpower. The assumption underlies DOD’s race to build fleets of unmanned vehicles, build ever-more complex and netted electronic information systems, and deploy ever-more electronic decision aids to our sailors and soldiers.

But is it reasonable to assume that our increasingly automated and computerized systems are and will remain cybersecure, trustworthy and resilient?ix I think that several of these suppositions are or will very soon be in doubt for a simple reason: Unlike more traditional forms of physical power, cyberpower relationships can shift unpredictably and leave our nation in a condition of relative uncertainty. Thus our ability to predict, observe and react may be inadequate to maintain information dominance and cyber superiority. Why is this so?

> Uncertainty of Cyberpower

Cyberpower calculations are increasingly opaque, and as a result, determining which country is or will remain in the cyber lead is uncertain.x Unlike security calculations and arms races of the past, where counting tanks, battleships or ICBMs provided a rough measure of relative technological power, such calculations are more difficult if not impossible today. The addition of each new IDD to the already millions of such devices in the DOD inventory adds another conduit for cyberattack and contributes to rising complexity.xi Due to the proliferation of IDDs, we are on a trajectory towards the time when nearly all critical systems and weapons may be accessible and hackable by computer code. In this new electron web of machines, if one of our stronger cyber rivals gains a strategic computing advantage (perhaps a breakthrough in supercomputing or cryptography), the consequences could range from the tactical to the strategic across our netted systems and automated platforms to the detriment of soldiers who have become dependent on electronic devices.

And there is a dawning revelation of the vulnerability of automated and remotely piloted vehicles. DOD’s Defense Advanced Research Projects Agency (DARPA) some years ago instituted the High-Assurance Cyber Military Systems (HACMS) program to provide better protections to the American drone fleet. Most recently, DARPA all but admitted it was struggling to keep up with the pace of AI evolution and began a series of workshops, AI Forward, in the summer of 2023, to bridge the fundamental gap between the AI industry and DOD.xii The U.S. Air Force Research Laboratory Chief Microelectronics Technology officer admitted that, “At a high level … our program offices and our contractors do not have good visibility into the electronics and designs that they’re actually delivering into the field … . If you don’t know what is in your system, how can I possibly trust it?”xiii

It is not just the scope that’s concerning but also the speed at which power can shift. Espionage and treachery have been historical realities since before the Trojan Horse. But with the growing reliance on IDDs, automation and networks, the costs of failure are accelerated, magnified and broadcast systemwide.

Edward Snowden released documents cataloguing National Security Agency (NSA) activities and did significant damage to U.S. national security, but the operating military forces were largely unaffected. What if critical electronic and automated systems were either hacked or compromised? With ever-more netted, automated military Supervisory Control and Data Acquisition (SCADA) systems, a hack that disables ever-greater segments of our infrastructure might be possible and could happen rapidly, perhaps with little warning.xiv

In the recent past, it took several years to build battleships or nuclear submarines to change a naval balance of power, during which time we could see the shift in power coming, for it was hard for a potential adversary to hide the 50,000-ton behemoths. Conversely, today, with our growing reliance on automated machines, an opponent might gain a strategic cyber advantage with the changing loyalties of a single programmer or the breakthrough by a team of programmers producing powerful algorithms, all occurring with a minor physical footprint, perhaps in a non-descript office building, all in a relatively short period of time.

> Root Cause: OODA & Trust/Resilience

How did the frontline military become so dependent on automation and electronics? Short answer: In the 1970s and 80s, leading military thinkers and technologists privileged speed as a determinant on the tactical battlefield. Electronics and automation accelerated Colonel John Boyd’s OODA Loop, which made sense in the environment in which they developed their ideas. Given how the problem was defined then, automation with its ever-higher speeds of decision-making made good tactical sense, especially when pilots were independent and not networked.xv But a combination of groupthink among military leaders and growing momentum in the R&D/acquisition system now propels us toward greater automation and less direct human control, even though the environment and conditions have changed.xvi

As mentioned previously, Boyd’s concept of “getting inside an enemy’s OODA Loop” gained a growing number of adherents across all the military services, in all platform communities, and eventually in the government and industry writ large.xvii Boyd was a fighter pilot who built on his experience in the cockpit and argued that the central objective of new systems was to speed up the decision cycle: to “Observe, Orient, Decide and ACT (shoot)” an enemy first. However, in the OODA Loop, the trustworthiness of the decider (the pilot) was not a factor. The number of American fighter pilots who became traitorous in the cockpit has been minimal, possibly none, after a century of air combat experience.

To be sure, there have been American and Allied combatants who turned traitorous on the battlefield. But in cases where enemies infiltrated or swayed a decision-maker, typically in the ground forces, the effects were localized. Such human hacking cannot, by the nature of human-analog functioning, ever become systemwide. Witness the isolated “green on blue” attacks (in which Afghan soldiers attacked American troops) that periodically occurred in Afghanistan, which could not spread systemwide at the digital speed of light. In contrast, where there are IDDs run by ones and zeroes, the entire netted system becomes vulnerable, whether squadrons of Unmanned Aerial Vehicles (UAVs) or a division of soldiers and their electronic decision aids. A cyberattack could thus turn a system of UAVs against us or corrupt a platoon’s kit of electronic decision aids and produce strategic or tactical losses on multiple battlefields simultaneously.

Thus, the “more speed” orthodoxy has been turned on its head with the possibility of cyberattack and questions of trust and brittleness. With continued proliferation of IDDs, and without keeping cyber superiority, the inevitable and unavoidable result will be increased surface area for cyber vulnerabilities, a higher chance of cyber penetration and reduced resilience. Is this orthodoxy on the wane? Recently, DOD adopted another OODA-Loop driven initiative, the Joint All Domain Command and Control (JADC2), to include integration with nuclear command and control systems.xviii Though this strategy document tweaks the phrasing ever so slightly to “Sense, Make Sense and ACT,” make no mistake, this is OODA-Loop philosophy arguing for faster systems integrated even with nuclear control systems.

Persuading a critical mass of military officers to question their preference for speed and automation requires an alternative philosophy, which I suggest is trust and resilience. Trust is well understood, but what are the key features of resilience for combat units?

Resilience, the ability to sustain a cyberattack and then restore normal operations,xix is an especially important consideration in America’s cyber cadres. But maintaining resilience with frontline units, which navigate in dangerous waters or face a kinetic military environment, is different than maintaining resilience in relatively static civil systems and different than systems within safe borders, such as Fort Meade and NSA. In systems situated safely behind secure borders and walls, a momentary cyber breach, termed “zero day,” can be sustained typically without physical damage (STUXNET-like attack notwithstanding). The coding problem will be corrected, and if data was lost, there typically exist backup locations for critical data.

But when a system is physically located in a potentially kinetic or environmentally dangerous military area, a zero day relating to navigational, electrical, propulsion and/or defensive systems may be unrecoverable. A cyberattack in conjunction with kinetic attack can result in irreparable harm that cannot be regained with a software patch or by accessing the backup data. Thus, viewed from this perspective, our growing dependence on millions of military IDDs, on growing numbers of unmanned and more highly automated systems in operating forces, in conjunction with the rise of both AI and cyber warfare, has changed the problem and requires new thinking. As well, there are potential major consequences of inaction, not just for the current generation but the next. As mentioned previously, technological systems have a well-documented tendency to ‘lock in’ the decisions and choices of the first generations of users. If we do not thoughtfully engage this question of AI, digitization, automation and cyber resilience now, the vulnerabilities may become endemic for our children.

> What should be done?

Ten years have elapsed since my first uneasy feelings at the war games. With the explosion in AI and advancement of cyber tools, the stakes are even higher now, as General Mark A. Milley, Chairman of the Joint Chiefs of Staff, opined in his farewell address: “The most strategically significant and fundamental change in the character of war is happening now, while the future is clouded in mist and uncertainty.”xx But Milley is not alone. The National Science Foundation has also sounded the alarm. The clouds of digital data and millions of robotic or automated machines may reach new levels of efficiency if guided by AI, but such a combination also poses a risk that “these [AI] systems can be brittle in the face of surprising situations, susceptible to manipulation or anti-machine strategies, and produce outputs that do not align with human expectations or truth or human values.”xxi

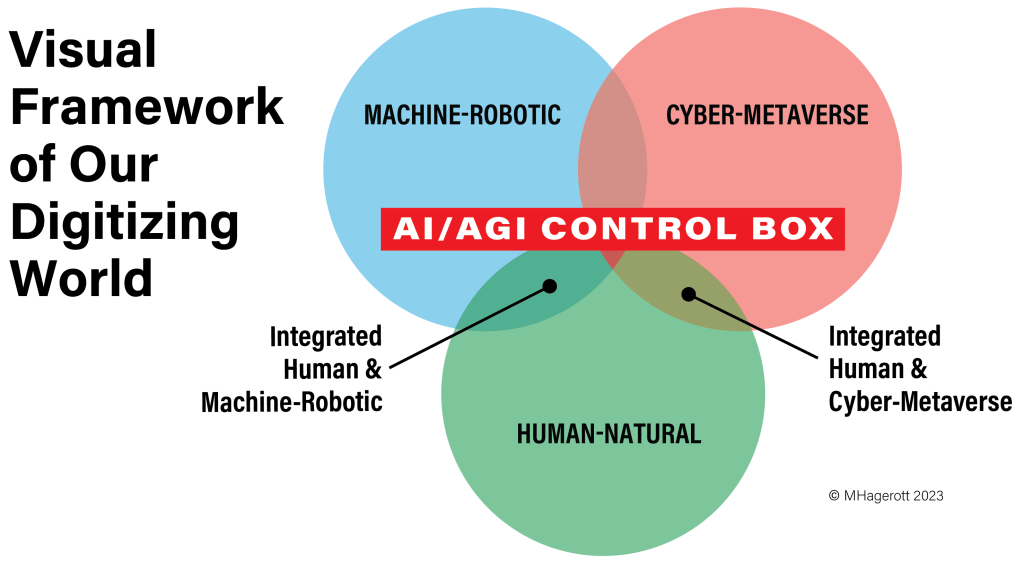

With digitization, there are now three interlocking realms of activity on our planet: (i) Human-Natural, (ii) Machine-Robotic (near or fully autonomous intelligent robots) and (iii) Cyber-Metaverse (non-tactile internet including the Cloud). Increasingly, AI (and potentially artificial general intelligence [AGI]) is taking control of realms (ii) and (iii) and the convergence of all three, as well as threatening to usurp most of the Human-Natural realm. This graphic illustrates the challenge and urgency of limiting the reach of AI to reduce cyberattacks and long-term human deskilling.

If deliberate policy does not slow the trajectory of R&D/acquisition, the culturally privileged OODA philosophy will drive even wider proliferation of AI and IDDs in new systems,xxii resulting in further automation and human-deskilling of combatants and a loss in resiliency.

There are several courses of action to defend against rising cyber uncertainty and the unique challenges of military and civilian resilience. Of course, we will never disinvent the digital computer nor eliminate our many networks, and we shouldn’t try. In cases where speed is critical, we must risk deploying highly automated systems, even with the attendant cyber risk. DOD is already pursuing the most technically sophisticated courses of action: investing in the latest cyber-secure systems;xxiii contracting for the best software provided by the best cybersecurity firms; conducting R&D to create near unhackable IDDs or near unbreakable cryptography (if a breakthrough in quantum computing doesn’t first break current encryption); building secure, government-controlled production facilities; developing unhackable inertial navigation systems; hiring more and better qualified computer network defense experts;xxiv and incorporating the most rigorous antitamper technologies in the growing fleet of unmanned systems. But developing computer code to check on computer code is a costly, and some say impossible, task, a problem identified by Alan Turing and now known as the Halting Problem: when does the computer know it can halt searching for a virus? For these reasons we must carefully shape, narrow or limit the application of more powerful and vulnerable AI to existing and future digital systems.

But there is another way to increase cybersecurity, to reduce the surface area of cyberattack, increase resilience, detect failures and provide unhackable code that will carry out orders uncorrupted: Keep more humans in the loop, making decisions and using their natural human skills to control machines and communicate when the automated systems show signs of failure or corruption.

What does rebuilding human-machine resilience entail? We should maintain more legacy and human-centric systems, as well as modify and reform officer and enlisted education to ensure our operators can navigate, fight, command and control (C2) in a less-netted and computer-aided environment. This includes training to restore human agency in the key functions of move-shoot-communicate, to include both maritime and land navigation; non-computer aided communication; non-networked, human-directed warfighting capabilities; human language proficiency and critical thinking, before these KSAs (Knowledge, Skills and Abilities) have been totally supplanted by AI-robotics or dangerously atrophied. Rebalancing the roles of AI-powered digital machines and ensuring the vitality of the human member of the human-machine team might cost more but could preserve human agency and create a more resilient human-machine system, reducing the surface area for cyberattacks and the possibility of cyber-silent failures, as well as providing the ultimate unhackable code: natural human cognitive and physical processes.

The following are military areas of concern, where the OODA-Loop fueled rush to replace-the-human with faster, increasingly autonomous systems has opened the door for digital and AI-related cyberattack.

> Education to Rebuild Human Skills

As stated before, we cannot unplug the DOD from all AI and digital machines. Rather, the goal is to consciously decide how to shape AI and automation to ensure a more trustworthy, resilient human-machine team in cyber conflict or war. Preserving or invigorating the human element in the human-machine team will bring advantages, including reduced surface area of possible cyber penetration; increased resilience; and fewer silent failures, since more trained and skilled operators will be available to perceive when equipment performance begins to degrade, a gap which was demonstrated in the STUXNET cyberattack.xxv To this end, combat training should include conditions of degraded communications, in which control of forces would have to be conducted solely with human-readable and human-audible transmissions, similar to the EMCON strategies during the Cold War.xxvi

Why does this convey a possible advantage? Humans remain all but impervious to cyberattack. To disable a human and his/her human-operated mechanical system typically requires the physical destruction of such a system, person or platform. Thus, the continued manual human presence in key military processes may in fact increase the resilience of our systems.xxvii

To their credit, the Army officer training programs continue to require proficiency at land navigation, unaided by GPS or any digital device. While costly, the sea services may need to consider a reinvestment in the older radio navigation systems, which were all but disestablished in the past decade, and human-centric celestial navigation should be preserved.xxviii Easy access to GPS position data now results in deskilling human operators,xxix who grow yet more reliant on electronic systems in a reinforcing cycle. A recently released report concerning the grounding and destruction of the USS Guardian explicitly notes the crew’s overreliance on GPS data and digital charts, and their failure to use physical/optical verification (the eyes of the deck officers) to avoid shoal water.xxx To its credit, the U.S. Naval Academy in Annapolis reversed a 15-year-old decision to eliminate celestial navigation, and now the entire student body receives basic instruction and training.

DOD should continue to require new officer candidates to be electronic-enhancement free, and DOD should continue to avoid the implantation of any electronic devices in military personnel to ensure that natural senses remain acute.xxxi On the battlefield, DOD should proceed carefully in providing soldiers or sailors with external electronic sensory or decision aids, which may in the short term provide a memory boost, facial recognition or language translation capability, all desirable tools in a cyber-secure environment. But such technologies will inevitably lead to the deskilling of humans and increasing cyber vulnerability while reducing a unit’s resilience. The DOD is aware of the growing inventory of ‘wearable sensor tech’ and should be applauded for its recent efforts to study this issue.xxxii

And not just the military educational institutions have a responsibility, but also the entire national K-12 system. The threat of society-wide disinformation and overreliance on the internet and now AI is growing. A key counterstrategy to preserve trust and resilience in our young military ranks is a reemphasis on critical thinking both in K-12 and collegiate programs, including ROTC and the service academies. To that end, there must be an expanded national effort, from kindergarten to the doctoral level (Cyber PK-20), to impart a basic understanding of digitization, cyber hacking and AI, tantamount to basic math and language literacy efforts of the 19th century. Similarly, federal and state governments should consider a new Digital Service Academy and even a national Digital-Cyber Land Grant Act to invigorate digital and cyber education at the collegiate level.xxxiii

> Shaping Deployment of AI & Automation

Our culture, at DOD and throughout America, privileges the new and technical. But we misinterpret our history if we think that previous successful technological revolutions proceeded without abatement or delay. Many technical revolutions proceeded in fits and starts, as new technology was tested, found wanting and then reapplied with greater success. In the past, many experts were convinced, for example, that the neutron bomb and nuclear-powered aircraft were wonderful ideas. But these and other technological applications proved unwise, although submarine and large-ship nuclear propulsion and civilian power generation were widely adopted to great benefit. Going further back, speed and labor saving were not the unquestioned policy drivers that they are today.

In the 19th century, the purchase and employment of speedy steam ships was delayed in favor of more resilient, reliable, steam-sail hybrids.xxxiv In the nuclear-power revolution of the mid-20th century, Admiral Hyman G. Rickover purposely chose a relatively costly, highly trained human-centric organizational approach over a labor-saving, more computer dependent system of reactor operations, display and control—a choice that the Chernobyl nuclear meltdown incident confirmed as profoundly wise.

A strategy of slowing or narrowing the deployment of AI and automation has now gained national support. Most recently, hundreds of scientists, engineers and entrepreneurs called for a six-month delay in the further fielding of advanced AI algorithms, until the larger implications of such technology could be studied.xxxv But the issue goes deeper and further than just the latest general AI algorithms. Some potential use cases are described below.

Poseidon

Russia’s Poseidon (also known as Status-6 and, in NATO, as Kanyon) is a large, intercontinental, drone torpedo, which is nuclear-powered and nuclear-armed. Poseidon can travel autonomously undersea up to 6,200 miles to attack enemy coastal cities. Illustration / Covert Shores

Large Ship Navigation & Russian Drone Nuke Sub

The U.S. Navy is under strict human control and exhibits great resilience in contrast to the now desperate Russian attempt at dangerous and reckless automation. For several decades, debate has swirled around the possible Soviet-Russian development of a ‘dead hand,’ or highly automated nuclear retaliatory Doomsday Machine. This was never confirmed until recently: The Russians have developed a nuclear-armed drone, which is an unmanned submarine capable of cruising several thousand miles at high speed with the mission to destroy coastal cities. The euphemistic characterization of this as a “torpedo” stretches any accepted use of the term, as torpedoes were always of limited range and tactical. This is an example of loss of human agency and profound loss in resilience: Putting a nuclear weapon on a submerged drone using AI and satellite navigation, both of which abandon human control and open the path for AI poisoning, hacking or even self-hallucination.xxxvi

Might the U.S. Navy eventually over-automate in a rush to keep pace with other rising powers? In the high-speed missile battlefield, OODA-Loop speed will most likely remain a necessity. Years ago, the Navy committed to high-end automation as a solution to missile attacks and built the AEGIS self-defense system, which allowed a robotic, lower-level AI to take control of a ship’s weapons. But humans were present, as I can attest as a former combat systems officer on an AEGIS ship. I observed the low-level AI computer’s independent action, and I was able to turn the analog key to disengage the firing signal, thereby shutting down the robotic system.

Yet there are theorists who argue for the benefits of depopulating entire ships, and indeed the U.S. Navy is on track to develop a fleet of unmanned ships and submarines. But as both AI- and cyber-hacking tools grow in sophistication, does the cost-benefit calculation of an increasingly robotic, AI-powered fleet begin to change? If we fight against a nation that gains even temporary cyber superiority, our ships may be at increased risk of navigational data corruption; they may be compelled to slow their speed of movement while they await the outcome of the cyber battle. Again, this could pose a profound risk.

AI or Human or Both in the Cockpit?

The issue of effective human control in the cockpit burst into the public view following two tragic aviation accidents involving the Boeing Company, the world’s most trusted aerospace corporation. Boeing’s stock plummeted, prompting the U.S. president to publicly state that the company was too important to go bankrupt. What was the root cause of the tragedies and near bankruptcy? Over-automation and human deskilling of the pilots of the venerable Boeing 737. While details of the incidents are too complex and voluminous to review here, suffice it to say that Boeing pursued software solutions to solve aeronautical engineering issues relating to the positioning of the engines on the wings. The software proved too complex and too automated, and the pilots of two planes were unable to overcome a computer-generated dive, resulting in the loss of all souls onboard both aircraft.

Compounding the problem was Boeing’s denial of the root problem after the first accident, trusting in the advanced software, until the second accident made the evidence incontrovertible. Thus, the question confronts us: For civilian airlines, how much automation and how much human skill? Where is the balance? And, with AI advances, there may be more pressure to replace pilots, but when one considers the possibility of hacking and AI hallucinations, a go-slower approach to preserve human-machine resilience in the cockpit seems the right path.

Similar questions confront the military. A debate has raged in the Air Force, Army, Navy and Marine Corps about the balance between manned and unmanned cockpits of the future. While we will have both going forward, the question of cybersecurity should give cause for pause. In the later years of the Afghanistan conflict, an advanced U.S. surveillance drone was downed by a relatively primitive ‘spoofing’ or hacking of the GPS signal.xxxvii No doubt the Air Force has hardened drone defenses against such primitive hacking, but hackers can upgrade their technical tool set, too, ad infinitum. One need only consider: Would a human reconnaissance pilot have allowed his or her aircraft to turn west and head over Iranian airspace? Not under any circumstances. Thus, we should ask: Is it wise to increasingly turn over surveillance to systems that could cost us the entire surveillance fleet if we lose cyber superiority?

These are complex problems that will only grow more portentous as DOD works to integrate AI and ever-more robotic platforms into our frontline forces. Given the momentum phenomenon discussed before, it is urgent to slow the deployment of AI and automation and preserve more naturally skilled humans in more cockpits, while the longer-term implications of emerging technologies become clearer.

> Nuclear Forces Risk Curve in Age of AI/Cyberwar

When the triad of nuclear deterrence (air, sea and land-based nuclear delivery systems) was first constructed, cyber conflict did not exist. Has the emergence of rapidly accelerating AI, combined with cyber conflict, shifted the risk curves, such that trust and resilience, and fundamental human control, may be at risk? As mentioned above, the Russians have already over-automated a nuclear-armed submarine. We need to carefully consider the arguments against ever making the next nuclear bomber unmanned. Again, a similar refrain should come to mind: Why take the bomber pilot out of the cockpit? Is this argument one that again privileges automation and faster decision-making? The decision to start a nuclear war to destroy an opposing country should be conducted at human speed with a premium on human trust (two or three persons in the cockpit with verified orders from the White House—not a hackable computer).

Given the increasing reality of cyberwar and the possibility that our nation could lose cyber superiority, might it be time to consider a radical possibility: that all the nuclear deterrent forces should be human guided, and that nuclear missiles should be limited in range such that only a pilot or submarine captain who navigated within the maximum stand-off range could launch an attack with such missiles? What is the risk-trade calculus if an enemy hacked a single nuclear missile and redirected its course away from an enemy state to that of an ally? Again, humans in the cockpit or humans at the helm of a submarine, with limited-range nuclear missiles, reduce the surface area of attack, increase resilience, make silent failures unlikely, and provide a cognitive system that needs fewer patches and expensive cyber software upgrades. Minimally, DOD should resist efforts by OODA-Loop philosophers to integrate nuclear command and control into larger networks, especially if powered by increasingly capable AI.

Yet, this latter scenario seems to be under consideration in the latest technostrategic 2023 document coming out of the Pentagon, proposing the Joint All Domain Command and Control (JADC2) concept.xxxviii While I am not privy to highly classified nuclear deterrent discussions, it seems now is the time to begin IDD control talks, especially regarding nuclear-armed devices, with the Chinese and the Russians, rather than another attempt to make American retaliatory strike capability even faster by integrating these doomsday weapons into JADC2 digital architecture.

> Military SCADA

The threat of cyber insecurity regarding military Supervisory Control and Data Acquisition (SCADA) systems poses an existential risk for the nation’s security and the lives of servicemembers on the front line. To achieve resilience, humans must be put back in the control room at the breaker panel with the skill and knowledge needed to effect basic repairs. As the military increases reliance on AI, automation and robotics, what emerges is the proverbial black box of declining human understanding and the ability to explain. Already, explainability is a challenge of some significance for AI scientists, but for such a situation to develop in the military may be tantamount to dereliction of duty. Without questioning the assumptions of speed and efficiency, we risk trust and resilience as the influence of private tech and defense contractors increases, and simultaneously the human skill and understanding of military officers and enlisted personnel declines. Is this fated? Or once again a default choice?

As a former chief nuclear engineer, I knew my technicians, all sworn to defend our Constitution and not conflicted by corporate loyalties, could understand and mitigate failures on most ships’ critical systems. Now, on modern ships, the vulnerability of ship SCADA-like systems and those ashore, dependent on millions of IDDs, gives me pause.

While it is impracticable to reestablish the human skill base and knowledge to provide backup operations for many ship systems, DOD must carefully consider the reinstallation of basic control systems to enable the ship or base to provide basic SCADA-like services, such as keeping the water running, the lights on, minimal propulsion and the ability to return to base—or what sailors on my ship referred to as the “Get-Home Box,” a bypass to the advanced electronics allowing sailors to drive the ship with basic electro-mechanical signals to the steering system and auxiliary propulsion. Although anachronistic sounding, in the face of loss of cyber superiority, these human-centric backup measures are becoming more logical.xxxix

> One Cloud or Many?

In the 1950s, the U.S. Navy planned to solve the complexity of digital networks with a single ship carrying a large mainframe computer to broadcast to the whole squadron. This centralized concept, analogous to today’s Cloud, was considered too vulnerable and replaced with distributed, independent computers on every ship, capable of operating in a completely stand-alone mode. Similarly now, a centralized Cloud computing solution for military operations produces the same obvious risk. The additional risk is as mentioned above: Who is essentially in control? Cloud technicians in Silicon Valley conflicted by corporate loyalties; the programmers of AI, which now controls the Cloud of military data; or at some minimum level, is control preserved under uniformed service members sworn to protect the Constitution?

Many studies indicate that warfare is evolving away from larger military platforms toward smaller and more numerous “swarms” to create more survivability and lethality. If we believe the computer and tactical kinetic battlefields have something in common, then might we need to reconsider by analogy that when seeking resilient, survivable computational storage capability, smaller and more numerous Clouds are better than bigger and singular? At one point, DOD was running almost 5,000 quasi-independent networks,xl which might be considered a swarm of networks.

Hopefully, somewhere in a deeply classified computer-storage wargame, senior decision-makers are considering the worst-case scenario of all-out cyberwar and the benefits or costs of swarm storage and computation strategy.

> AI/Cyber Age Conundrum

In the quest for speed and efficiency, do we risk compromising the trust and resilience of the U.S. military, as powerful AI combines with the tools of cyber conflict? Sometimes the aggressive pursuit of increased decision speed, as in OODA-Loop orthodoxy, is well justified. In other cases, several crucial systems were digitized and automated before the age of AI-powered cyberconflict came into focus, precipitating a tradeoff for speed and efficiency over trust and resilience. Keeping more humans in the loop and retraining them to regain lost skills may be a crucial strategy to improve the security of the nation and individual states in this era of AI cyberpower, characterized by its opaque calculations. The decision to shape, narrow or slow the trajectory of AI and automation and to preserve a modicum of human knowledge, skill and abilities will be unpopular in the defense industry. But asking hard questions of technical elites and reconsidering tradeoffs has a long history in our nation, and we are well justified in invoking this prudent tradition at the dawn of the AI/Cyber Age.

And we need to do so urgently. In September, according to The Hill, “Deputy Secretary of Defense Kathleen Hicks … touted a new initiative designed to create thousands of [autonomous] weapons systems powered by artificial intelligence, saying it will mark a ‘game-changing shift’ in defense and security as Washington looks to curtail China’s growing influence across the world. … [T]he new initiative, called Replicator, is part of a concentrated push at the Pentagon to accelerate cultural and technological change and gain a ‘military advantage faster’ over competitors. … [T]he Pentagon would work closely with the defense industry to field thousands of autonomous weapons and security systems across all domains in 18-24 months.”xli

Moreover, given that the high-tech, digital-savvy Israel Defense Forces and our own CIA/NSA were completely surprised by a low-tech, human-centric attack of strategic proportions on October 7 (the 50th anniversary of the 1973 Yom Kippur war), is more digital tech the answer? The rush to digitize and replace humans with intelligent machines may be ill-advised at this time of strategic uncertainty, and a thoughtful, slower approach seems in order. Political leaders need to start asking the hard questions, now.

i Russell, Stuart, Human Compatible: Artificial Intelligence and the Problem of Control, New York: Viking-Random House, 2019, pp. 255-56, extended quote here: “The solution to this problem [preserving human autonomy] seems to be cultural, not technical. We will need a cultural movement to reshape our ideals and preferences towards autonomy, agency, and ability and away from self-indulgence and dependency—if you like, a modern, cultural version of ancient Sparta’s military ethos.”

ii Kissinger, Henry, Eric Schmidt and Daniel Huttenlocher, “ChatGPT Heralds an Intellectual Revolution: Generative artificial intelligence presents a philosophical and practical challenge on a scale not experienced since the start of the Enlightenment,” Wall Street Journal, 24 February 2023, accessed here: https://www.wsj.com/articles/chatgpt-heralds-an-intellectual-revolution-enlightenment-artificial-intelligence-homo-technicus-technology-cognition-morality-philosophy-774331c6

iii The series of war games was funded by the Office of Secretary of Defense, Office of Rapid Fielding. Project leadership was shared between Dr. Peter Singer of Brookings and the NOETIC Corporation.

iv Hagerott, Mark, “Lethal Autonomous Weapons Systems: Offering a Framework and Some Suggestions,” presented at the Geneva convening of the 2014 Convention on Certain Conventional Weapons (CCW), link to brief here: https://docs-library.unoda.org/Convention_on_Certain_Conventional_Weapons_-_Informal_Meeting_of_Experts_(2014)/Hagerott_LAWS_military_2014.pdf

v Technology has been shown to be susceptible to what is called “momentum” or “technological lock-in,” wherein early decisions may gain a kind of inertia, and later efforts to redirect technology’s trajectory become all but impossible, often with negative consequences for later generations. See Thomas Hughes’ study of electrical power networks and Paul David’s study of the QWERTY keyboard.

vi The expanding realm of cyber insecurity is penetrating an increasing number of activities, from email servers, government databases, banks and critical infrastructure to now frontline weapons. See Heckmann, Laura, “Trustworthy Tech: Air Force Research Lab Looking at Uncertainties with Electronics,” National Defense, August 2023, pp. 28-30.

vii The advocates for accelerating the acquisition of unmanned systems are many, but their acknowledgement of the potentially high costs of ensuring cyber security could be more candid. If the lifetime costs of these systems included never-ending cybersecurity contracts, the argument to automate might be less compelling. In the first war game of the OSD series, for example, one senior level robot company executive exemplified this problem. When pressed about the cybersecurity of his company’s unmanned systems during an off-the-record meeting, the executive deferred cyber insecurity to software companies. He appeared to take little ownership of the potentially massive problem and offered that the “banks would be the first to solve the problem.”

viii The definition used in this essay is a simplification of many longer attempts at explanation. A particularly thoughtful essay on the subject is by Joe Nye, Jr. See “Cyber Power” at: https://projects.csail.mit.edu/ecir/wiki/images/d/da/Nye_Cyber_Powe1.pdf

ix “Silent failures” are considered by some experts in the field to be the worst kind, potentially the most damaging, since you don’t know they occur. With a human in the loop, especially on physical-kinetic type platforms, a human is on scene and can more quickly identify if the platform or system is failing to follow the assigned tasks. For more on silent failures, see Dan Geer, 26 May 2013, interview, “The most serious attackers will probably get in no matter what you do. At this point, the design principle, if you’re a security person working inside a firm, is not failures, but no silent failures.” Accessed on 31 July 2013: http://newsle.com/article/0/77585703/

x Martin Libicki of the RAND Corporation has written extensively on the problem of cyber attribution and the differences with traditional theories of deterrence, which relied on more certain knowledge of a potential adversary’s military capabilities than might be possible in the case of cyber.

xi Geer, Dan, 26 May 2013, interview with Newsle.com. Geer, a leading CIA executive, has noted that the complexity of our electronic netted systems may be the biggest challenge going forward, even before accounting for the determined attacks of a cyber rival.

xii Luckenbaugh, Josh, “Algorithmic Warfare: DARPA Host Workshops to Develop ‘Trustworthy AI,’” National Defense, June 2023, pg. 7.

xiii Heckmann, Laura, “Trustworthy Tech: Air Force Research Lab Looking at Uncertainties with Electronics,” National Defense, August 2023, pp. 28-29.

xiv CJCS U.S. Army Gen. Martin E. Dempsey’s speech at Brookings, 27 June 2013, warned of the growing scope and dynamism of cyber warfare, which will only grow in significance.

xv John Boyd exerted his greatest influence in the Air Force. In the Navy, RADM Wayne Meyer and VADM Art Cebrowski were the strongest advocates for automation and speed. Meyer was the lead architect of the AEGIS combat system, which was capable of autonomous/automatic weapon assignments and engagement (though the computer system was carried aboard a manned platform). Cebrowski is credited with developing the concept of Network-Centric Warfare, the idea that war would be fought between networks of high-speed computers and communications links. Interestingly, a subculture of the Navy, Rickover’s nuclear engineers, have been more reluctant to embrace ubiquitous networks, OODA-Loop speed and Network-Centric Warfare than have other communities. Submariners generally tend to value the independence of command and distributed, non-netted warfare. See article by Michael Melia, “Michael Connor, Navy Vice Admiral, Calls For Submarine Commanders’ Autonomy,” accessed 17 July 2013: http://www.huffingtonpost.com/2013/07/17/michael-connor-navy_n_3612217.html

xvi We have seen this momentum effect in DOD’s R&D/acquisition system. See Donald MacKenzie, Inventing Accuracy: An Historical Sociology of Nuclear Missile Guidance, Cambridge: MIT Press, 1990.

xvii The literature regarding Col. John Boyd is extensive. For a lengthy treatment, see Robert Coram, Boyd: The Fighter Pilot Who Changed the Art of War, New York: Hachette Book Group, 2010.

xviii Cranberry, Sean, Special Report, Part 1 of 7: “Joint All-Domain Command, Control A Journey, Not a Destination,” National Defense, July 2023.

xix Cyber resilience is becoming something of a watchword. Perhaps the most compelling definition is: “An operationally resilient service is a service that can meet its mission under times of disruption or stress and can return to normalcy when the disruption or stress is eliminated,” on pg. 1 in “Measuring Operational Resilience,” Software Engineering Institute, Carnegie Mellon University. But we should ponder: If a system was even temporarily hacked, or trust broken, the issue of resilience may become moot, because if the ship were to run aground or the aircraft crash, even should the computer programmers regain cyber control, the system is irreparably damaged even after a brief loss of cybersecurity. In these cases, we need to maintain and reinsert human operators and human skill … to stay off the rocks, so to speak.

xx Milley, Gen. Mark, Chairman of the Joint Chiefs. “Strategic Inflection Point,” Joint Forces Quarterly, 110, 3rd Quarter, 2023, pg. 6.

xxi National Science Foundation, “Artificial Intelligence (AI) Research Institutes, Program Solicitation, NSF 23-610, Theme 3,” accessed 17 August 2023: https://www.nsf.gov/pubs/2023/nsf23610/nsf23610.htm?WT.mc_%20ev=click&WT.mc_id=&utm_medium=email&utm_source=govdelivery

xxii In the field of technology studies, a key concept is technological momentum, wherein technological programs build up financial and social momentum, and sometimes propel themselves well beyond the size and scope that could be justified by dispassionate analysis. For discussion of this concept, see Thomas Hughes, Networks of Power, and Donald MacKenzie, Inventing Accuracy, the latter providing evidence of nuclear missile improvements, which in the later Cold War gained a momentum provided by R&D/acquisition programs that exceeded most reasonable requirements when viewed in hindsight.

xxiii Peterson, Dale. “People are Not THE Answer,” Digital Bond, 1 May 2013. See: http://www.digitalbond.com/blog/2013/05/01/people-are-not-the-answer/

xxiv DOD has announced that CYBERCOM will expand significantly, both with uniform personnel and contractors in an effort to provide better and more numerous experts to run cyber defense.

xxv The failure of human technicians to audibly monitor the centrifuges at the Natanz nuclear site was a key element in the STUXNET attack. Alert human operators would have heard the effects of the attack and could have stopped it, but they remained fixated on their digital and hacked instruments and were oblivious of the attack underway. See Mark Hagerott, “Stuxnet and the Vital Role of Critical Infrastructure Engineers and Operators,” International Journal of Critical Infrastructure Protection, (2014), v7, pp 244-246.

xxvi While the veracity of such articles may be in doubt, it is interesting to note that reports out of Russia indicate that in the wake of the Snowden disclosures, the Russians are reverting some critical communications to manual, human-centric typewriters and human-readable paper, unmediated by any electronic devices. This was a similar measure adopted by Osama bin Laden and Al Qaida, when they reverted to human messengers for their communications. The American reader’s reaction is most likely that we are better than the Russians and Al Qaida. But in the face of a determined cyberpower or following treacherous actions by an insider, such measures should at least be trained and planned as a contribution to overall military operational resilience.

xxvii See discussions of “resilience,” especially recent studies conducted by Carnegie Mellon University, Software Engineering Institute.

xxviii The Resilient Navigation and Timing Foundation has worked assiduously to call attention to the cyber vulnerabilities and existential threat to navigation in our current GPS dependent condition. See: https://rntfnd.org/

xxix The tendency of billions of humans to prefer faster, clipped, easier communication via Twitter, texting and hyperlinks is now showing potential signs of deskilling cognitive functioning. See Nicholas Carr, The Shallows: What the Internet is Doing to Our Brains, New York: Norton, 2010. There is growing evidence that naval officers are falling into the same deskilling pattern to destructive effect. See USS Guardian grounding report: http://www.cpf.navy.mil/foia/reading-room

xxx Haney, ADM Cecil D. Report by Commander, Pacific Fleet, 22 May 2013, https://www.cpf.navy.mil/FOIA-Reading-Room/

xxxi The ability to hack any number of medical devices has now been established, and the FDA is moving quickly to control their proliferation. See Sun, Lena H. and Dennis, Brady, “FDA, facing cybersecurity threats, tightens medical-device standards,” Washington Post, 13 June 2013, accessed on 31 July 2013 here: http://articles.washingtonpost.com/2013-06-13/national/39937799_1_passwords-medical-devices-cybersecurity

xxxii Study is being led by the Office of the Undersecretary of Defense for Acquisition and Sustainment, Dr. David Restione, Director of DOD Wearable Pilot Program. See Indo-Pac Conference, 2022, pg 11, accessed: https://ndiastorage.blob.core.usgovcloudapi.net/ndia/2022/post/agenda.pdf

xxxiii North Dakota was the first state in the Union to require cyber PK-20 education standards and also to propose a new Digital Cyber Land Grant Act. See Anderson, Tim, CSG Midwest Newsletter, 28 June 2023, link here: https://csgmidwest.org/2023/06/28/north-dakota-is-first-u-s-state-to-require-cybersecurity-instruction-in-k-12-schools/. The North Dakota University System was the first to propose a new Digital Cyber Land Grant Act. See Hagerott, Mark, “Time for a Digital Cyber Land Grant University System,” Issues in Science and Technology, Winter 2020, xxxiii, accessed here: https://issues.org/time-for-a-digital-cyber-land-grant-system/

xxxiv Morison, Elting, Men, Machines and Modern Times, Cambridge: MIT Press, 1966.

xxxv “Pause Giant AI Experiments: An Open Letter,” 22 March 2023, See: https://futureoflife.org/open-letter/pause-giant-ai-experiments/

xxxvi Huet, Natalie, “What is Russia’s Poseidon nuclear drone and could it wipe out the UK in a radioactive tsunami?” Euro News, 5 May 2022, accessed here: https://www.euronews.com/next/2022/05/04/what-is-russia-s-poseidon-nuclear-drone-and-could-it-wipe-out-the-uk-in-a-radioactive-tsun. See also, Kaur, Silky, “One nuclear-armed Poseidon torpedo could decimate a coastal city. Russia wants 30 of them,” Bulletin of the Atomic Scientists, June 14, 2023, accessed here: https://thebulletin.org/2023/06/one-nuclear-armed-poseidon-torpedo-could-decimate-a-coastal-city-russia-wants-30-of-them/

xxxvii Shane, Scott and David Sanger. “Drone Crash in Iran Reveals Secret U.S. Surveillance Effort,” New York Times. 7 December 2011.

xxxviii Cranberry, “Joint All-Domain Command, Control A Journey, Not a Destination.”

xxxix Fahmida Y. Rashid, “Internet Security: Putting the Human Back in the Loop,” 19 April 2012, See: http://securitywatch.pcmag.com/security/296834-internet-security-put-humans-back-in-the-loop

xl Dempsey, General Martin. Brookings Institution, 27 June 2013.

xli Dress, Brad, “Pentagon’s New AI Drone Initiative Seeks Game-Changing Shift to Global Defense,” 9 September 2023, The Hill.

Mark Hagerott, PhD

Hagerott serves as the Chancellor of the North Dakota University System. Previously, he served on the faculty of the United States Naval Academy as an historian of technology, a distinguished professor and the deputy director of the Center for Cyber Security Studies. As a certified naval nuclear engineer, Hagerott served as chief engineer for a major environmental project de-fueling two atomic reactors. Other technical leadership positions include managing tactical data networks and the specialized artificial intelligence AEGIS system, which led to ship command. Hagerott served as a White House Fellow and studied at Oxford University as a Rhodes Scholar. His research and writing focus on the evolution of technology and education. He served on the Defense Science Board summer study of robotic systems and as a non-resident Cyber Fellow of the New America Foundation. In 2014, Hagerott was among the first American military professors to brief the Geneva Convention on the challenge of lethal robotic machines and to argue the merits of an early arms control measure.