To Maximize AI’s Public Benefit

OPINION

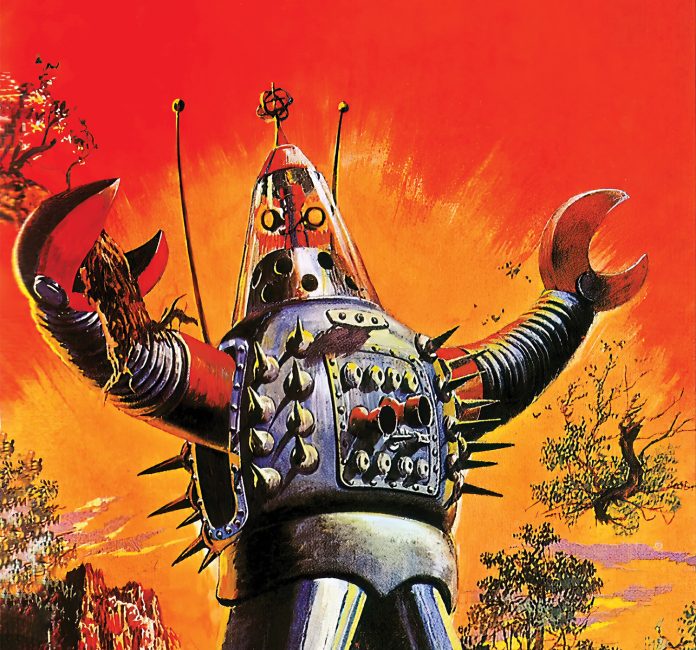

Blizzards of hype surround artificial intelligence (AI) and threaten to prevent society from attaining its benefits. Concerns range from fears that AI might become Skynet from the “Terminator” movies, or an evil AI like The Entity[i] from the recent movie “Mission: Impossible – Dead Reckoning Part One,” bent on destroying humanity, to more down-to-earth worries about job losses. As with any technology, some actors will use AI for nefarious purposes,[ii] and concerns about discrimination[iii] have also been raised.

In reality, though, AI is poised to bring massive benefits, including protecting us from cyberattacks, improving our health,[iv] responding to emergencies[v] and even helping manage personal finances.[vi] To enjoy these advantages, we need to look past the hype, including the loud calls for regulation from a few computing luminaries, and focus on how innovation, technical discovery and entrepreneurship can be encouraged to enhance AI technologies and drive growth.

AI Isn’t Out to Take Your Job

A big part of the hype surrounding AI is the claim that it will cause[vii] large-scale job loss.[viii] Every technology that makes humans more efficient, or that can perform work currently done by humans, changes the workplace. This isn’t new. At the beginning of the Industrial Revolution, concerns were raised about the cotton gin,[ix] a device that separated cotton fibers from their seeds, a burdensome task previously performed by hand. Printing presses (which required the time-consuming process of manually typesetting each page, letter by letter[x]) and numerous other technologies were likewise decried as threats to jobs.

Of course, while some jobs changed and some workers were displaced and moved into other jobs, neither these nor other technologies caused long-term mass unemployment. In fact, technology has raised Americans’ standard of living by increasing the purchasing power of each hour of work. As Robert Tracinski aptly explains,[xi] the market forces created by new technologies’ efficiencies increase both the value of, and the demand for, human labor.

Historical evidence thus provides a strong basis for optimism, and history is the best guide we have for predicting the future.

Protecting, Not Pernicious

The concern that AI will be used as a weapon[xii] or by criminals is also commonly raised. This, though, is little different from concerns that could be raised about any tool. The same hammer that can be used to construct a building can also be used to smash a window. A backhoe can help create, or rapidly destroy, landscaping.

Because of its power, AI has been, and will continue to be, used by governments, militaries, criminal organizations and numerous other entities. This, however, isn’t a reason to try to stop AI through regulation. Quite the opposite. We need to avoid overregulation so that those developing AI for positive and protective purposes can keep pace with those who have criminal or otherwise villainous intent, as well as with nations seeking to use AI to assert dominance over us. Neither criminals nor foreign states are likely to be significantly deterred by our restrictive regulation of AI. In fact, they would benefit from it.

Regulated Already

The ongoing national discussion about AI regulation gives the impression that this technology is being developed and deployed in an unregulated “wild west.” In reality, existing laws already address most concerns, which is why calls for, and efforts toward, new regulation at the federal, state and municipal levels are more about political and commercial hype than substance.

Companies that might use AI for hiring, for example, are already covered by a variety of anti-discrimination laws. Whether an AI program or a human discriminates, the legal protections already exist. If anything, outcome-based enforcement may be easier against AI systems, since they make data-driven decisions and have no mechanism or incentive to cover up their conduct.

The same is true across numerous other areas of concern. Some laws may require limited modification—for example, to apportion liability for an AI’s conduct between its developer and an operator. However, these are minor changes to existing laws, not a new regulatory regime specifically created for AI.

Nor should we want AI to be regulated separately. Different standards for human and AI activities, whether more or less restrictive, will inherently create loopholes that may keep laws and regulations from being effective. For example, AI activities might be exempted from certain general regulations if AI-specific regulations exist, or general laws might be preempted by AI-specific ones. If those AI-specific laws can be subverted by small technical changes that alter how they apply, or rendered obsolete by technological advances, neither set of laws may achieve its regulatory intent.

Well-written laws that focus on preventing harm and encouraging socially beneficial outcomes shouldn’t need to be rewritten for AI. Laws that focus narrowly on specific methods of preventing or producing outcomes, on the other hand, might benefit from review and revision, even without considering AI.

Regulation Benefits the Big

Some of the companies developing AI are calling for regulation.[xiii] This is good for them and bad for everyone else.[xiv] Regulation transfers responsibility from companies to regulators and may remove or limit a company’s liability for damage caused by its products, if the company can show that it followed the regulations.

This is a recipe for irresponsible conduct. While some laws are needed to prevent developers from contractually avoiding liability for product failures, too much regulation can remove responsibility for AI failures, creating a moral hazard and promoting a lack of accountability.

AI regulation may also limit the number of firms able to compete. New entrants, such as startups and other small businesses, may have difficulty understanding and complying with the regulations, and may lack the financial and legal resources required to do so. Keeping new entrants out of technology markets favors established firms while hampering technological advancement and disadvantaging society at large.

Regulation Is Slow

Another important issue is the rapid pace of technological change. Regulations that focus on producing beneficial outcomes or preventing harmful ones might be helpful. However, those that take a more detailed approach to regulating technological design, development and operations will typically become outdated quickly yet remain in force. This could block innovation and undermine the development of socially beneficial technologies. Also, the longer regulations remain in force, the more likely well-resourced firms are to invent workarounds, rendering the regulations ineffective and subverting lawmakers’ and regulators’ goals.

Liability Challenges

Instead of seeking to regulate AI separately, policymakers and lawmakers should focus on answering questions and clarifying laws about whether only humans can create works, make decisions and take actions. Questions abound, for example, regarding the protection,[xv] authorship[xvi] and ownership[xvii] of AI-generated intellectual property. These should be settled through a public lawmaking process that allows all concerned parties to be heard.

There is, similarly, a need to ensure a consistent and fair split of responsibility for AI’s systemic failures. Software firms should not be allowed to transfer complete liability to users for the failures, acts and omissions of products that those users do not, and cannot, fully understand, because they lack access to the underlying code and data.

However, in most cases, holding the AI developer solely responsible is not appropriate, since configuration, implementation, prompting, inadequate testing and even the decision to use AI for a given application at all often rest in the hands of another party. Any of these can cause the system to fail, even without a defect. We also need to make sure that harmed consumers do not end up in the middle of a courtroom battle between AI developers and implementors that leaves the injured party responsible for determining and proving which party is at fault.

Good Regulation

Regulation should focus on outcomes, such as promoting safety, preventing discrimination and protecting consumers. In each case, laws should require or proscribe outcomes and specify how it will be determined whether those outcomes have occurred. Prescribing specific conduct, though, is unhelpful. Imagine regulations outlawing murder that are narrowly tailored to certain weapons: murder by gun, by knife or by motor vehicle. If only these are proscribed, a would-be murderer could bypass the law, and punishment, by simply choosing a different weapon, such as a baseball bat.

Laws may also be needed to ensure that records are retained to aid in the determination of responsibility. When humans commit acts or make mistakes, they (and other witnesses) can provide testimony. Since AI systems cannot be sworn in to testify, this will not be the case with AI decisions. It is, thus, reasonable to ensure that equivalent evidence, such as logs and other recordings of decisions and actions, is maintained. This will be especially important in determining fault among a technology developer, implementor and the possible contributory acts or negligence of an end-user.

We should also create regulations that help support technological development. One area of need is protection for the open-source community. We need to make sure that individual contributors to open-source projects cannot be held personally liable for good-faith contributions that result in injury. Companies that benefit from free access to open-source projects, in particular firms that repackage them or use them to provide services to others, must assume (and take action to mitigate) the risk of the free software they use. Contributors need this protection against both civil and criminal liability.

Ad Astra

AI has the potential to dramatically change our society for the better. It can help relieve humans of burdensome and repetitive tasks. It can enable and improve the personalization of entertainment options.[xviii] AI can help care for the sick and elderly.[xix] AI can aid the productivity and creativity of authors, directors and artists[xx] and expand the possibilities available to them.

To deliver these benefits, AI must be allowed to grow in use and thrive. Regulations that prevent its deployment in order to protect special interests that lobbied for protection from technological advancement, regulations that place AI users at a disadvantage to those who use humans for similar tasks, and regulations that favor entrenched software developers are all contrary to the long-term public good. The next five years will be critical to human development and progress in numerous ways. One of the key decisions each jurisdiction must make will be how it treats AI.

These decisions may truly affect the proverbial “fate of nations,” with AI-embracers thriving and advancing while AI-Luddites find themselves left behind.

References

i https://www.washingtonpost.com/technology/2023/07/28/mission-impossible-ai-not-realistic/

ii https://www.foxnews.com/world/hong-kong-arrests-6-loan-fraud-scheme-using-ai-deep-fakes

iii https://www.npr.org/2023/01/31/1152652093/ai-artificial-intelligence-bot-hiring-eeoc-discrimination

iv https://www.foxnews.com/opinion/i-love-ai-because-add-decades-our-lives

vi https://www.foxbusiness.com/technology/consumers-want-ai-help-manage-their-personal-finances-study

ix https://www.asme.org/topics-resources/content/how-the-cotton-gin-started-the-civil-war

x https://www.history.com/topics/inventions/printing-press

xii https://theconversation.com/artificial-intelligence-is-the-weapon-of-the-next-cold-war-86086

xiii https://www.nytimes.com/2023/05/16/technology/openai-altman-artificial-intelligence-regulation.html

xv https://www.jdsupra.com/legalnews/can-inventions-created-using-artificial-8457151/

xvi https://www.jdsupra.com/legalnews/no-copyright-protection-for-works-6704892/

xix https://www.cnbc.com/2023/07/12/the-ai-revolution-in-health-care-is-coming.html

xx https://www.dailybreeze.com/2023/07/09/dont-crush-the-potential-of-ai-tech/

Jeremy Straub, PhD, is an Assistant Professor in the North Dakota State University Department of Computer Science and an NDSU Challey Institute Faculty Fellow. His research spans a continuum from autonomous technology development to technology commercialization to questions of technology-use ethics and national and international policy. He has published more than 60 articles in academic journals and more than 100 peer-reviewed conference papers. Straub serves on multiple editorial boards and conference committees. He is also the lead inventor on two U.S. patents and a member of multiple technical societies.