Seven years ago, Maj. Gen. Robert Latiff and I wrote an opinion article for the Wall Street Journal, titled “With Drone Warfare, America Approaches the Robo-Rubicon.” The Week, which reviews newspaper and magazine stories in the U.K. and U.S., highlighted the article in its “Best Columns-US” section. “If you think drone warfare has created some tricky moral dilemmas, said [Latiff and McCloskey],” The Week began its précis, “just wait until we start sending robotic soldiers into battle.”

“Crossing the Rubicon,” of course, refers to Julius Caesar’s irrevocable decision that led to the dissolution of the Roman Republic, a limited democracy, and ushered in the Roman Empire, which would be run by one or more dictators (aka, emperors). On January 10, 49 BC, General Caesar led a legion of soldiers across the Rubicon River, breaking Roman law and making civil war inevitable. The expression has survived as an idiom for passing the point of no return.

Our contention in the article was that full lethal autonomy—that is, empowering robotic weapons systems with the decision to kill humans on the battlefield—crosses a critical moral Rubicon. If machines are given the legal power to make kill decisions, then it inescapably follows that humanity has been fundamentally devalued. Machines can be programmed to follow rules, but they are not persons capable of moral decisions. Surely taking human life is the most profound moral act, which, if relegated to robots, becomes trivial, along with all other moral questions.

Not only does this change the nature of war, but human nature and democracy are put at risk. This is not merely a theoretical issue but a fast-approaching reality in military deployment.

Drones are unmanned aerial vehicles that—along with unmanned ground, underwater and eventually space vehicles—are crude predecessors of emerging robotic armies. In the coming decades, far more technologically sophisticated robots will be integrated into American fighting forces. As well, because of budget cuts, increasing personnel costs, high-tech advances, and international competition for air and technological superiority, the military is already pushing toward deploying large numbers of advanced robotic weapons systems.

There are obvious benefits, such as greatly increased battle reach and efficiency, and most importantly the elimination of most risk to our human soldiers.

Unmanned weapons systems are already becoming increasingly autonomous. For example, the Navy’s X-47B, a prototype drone stealth strike fighter, can now navigate highly difficult aircraft-carrier takeoffs and landings. At the same time, technology continues to push the kill decision further from human agency. Drones are operated by soldiers thousands of miles away. And any such system can be programmed to fire “based solely on its own sensors,” as stated in a 2011 U.K. defense report. In fact, the U.S. military has been developing lethally autonomous drones, as the Washington Post reported in 2011.

Lethal autonomy hasn’t happened—yet. The kill decision is still subject to many layers of officer command, and the U.S. military maintains that “appropriate levels of human judgment” will remain in place. However, although there has not been a change in official policy, it is fast becoming a fantasy to maintain that humans can make a meaningful contribution to kill decisions in the deployment of drones (or other automated weapons systems) and in robot-human teams.

Throughout our military engagements in Kosovo, Iraq and Afghanistan, the U.S. enjoyed complete air superiority. This enabled complex oversight of drone attacks, with the luxury of enough time for layers of legal and military authority to confer before the decision to fire on a target was made. Such air superiority would not exist in possible military engagements with Russia, China or even Iran. The choice would then be between lethally autonomous drones and human pilots, with significant casualties.

Aside from pilot risk, consider the cost differential. Each new F-35 Joint Strike Fighter jet will cost about $100 million, and training an Air Force pilot costs an additional $6 million per year. In contrast, each hunter-killer drone (MQ-9 Reaper) costs about $14 million.

Military terminology has shifted from humans remaining “in the loop” regarding kill decisions to “on the loop.” Soon technology will push soldiers “out of the loop,” since the human mind cannot work fast enough to process the data that computers digest instantaneously. Future warfare won’t be restricted to single drones but will involve masses of robotic weapons systems communicating at the speed of light.

Recently, the Defense Advanced Research Projects Agency (DARPA), which funds U.S. military research, began exploring how to design an aircraft carrier in the sky, from which waves of fighter drones would be deployed. These drone swarms will be networked and communicate with each other instantaneously. How will human operators coordinate kill decisions for several, if not dozens, of drones simultaneously?

Third Offset Strategy

U.S. Defense Secretary Ashton Carter calls the Pentagon’s new approach to deterrence the “third offset strategy.” The first offset of the post-WWII era, which asserted American technological superiority, was the huge investment in nuclear weapons in the 1950s to counter Soviet conventional forces.

Twenty years later, after the Russians caught up in the nuke race, the U.S. reestablished dominance via stealth bombers, GPS, precision-guided missiles and other innovations. Now that the Russians and Chinese have developed sophisticated missiles and air defense systems, the U.S. is seeking advantage through robotic weapons systems and autonomous support systems, such as drone tankers for mid-air refueling.

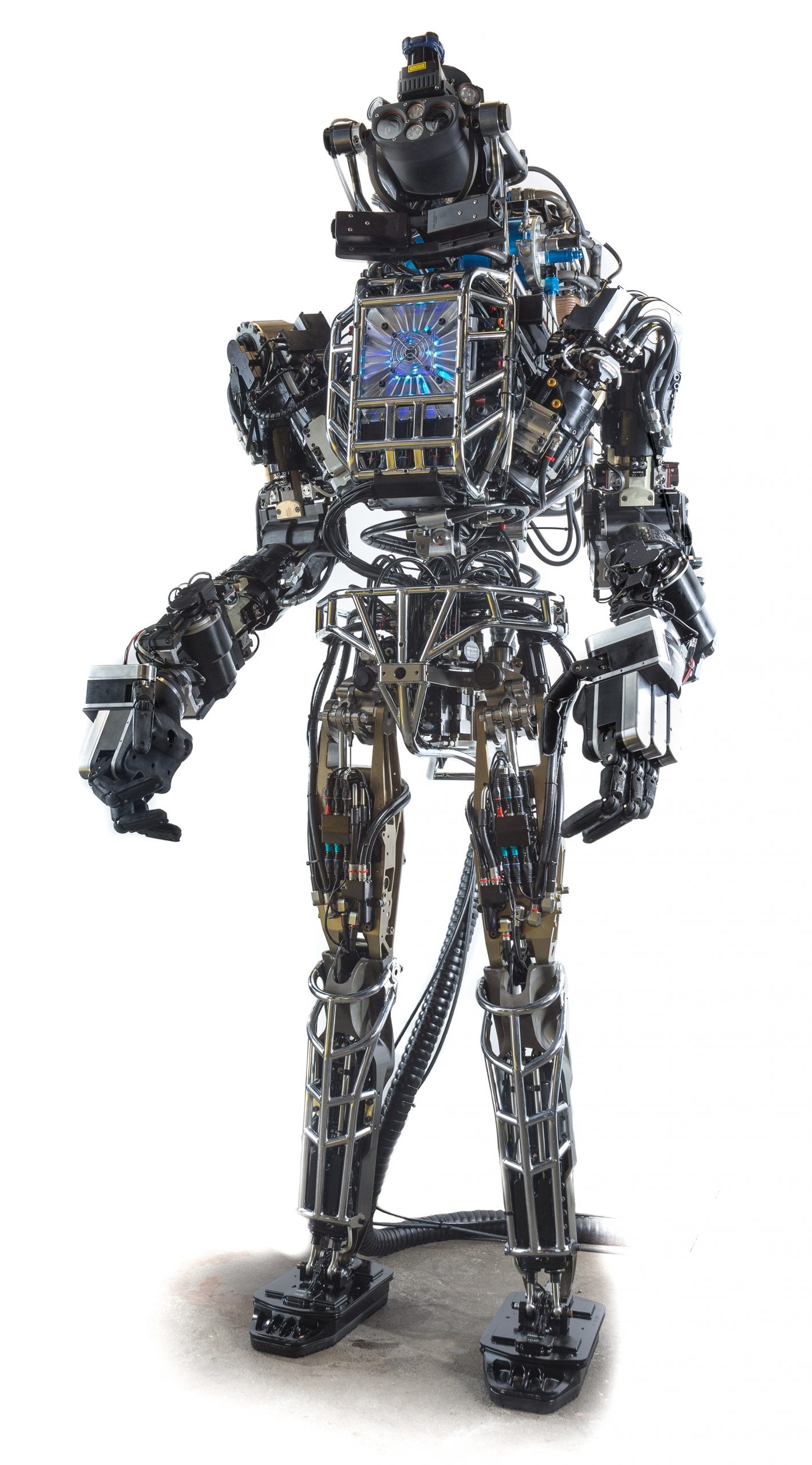

What’s remarkable is how publicly the Defense Department is talking about robotic autonomy, including human-robot teams and human-machine enhancements, such as exoskeletons and sensors embedded in human warfighters to gather and relay battlefield information. Easily accessible online are “The Unmanned Systems Integrated Roadmap FY2011-2036” and the “USAF Unmanned Aircraft Systems Flight Plan 2009-2047,” which describe the integration of unmanned systems into every aspect of the U.S. military’s future. Given the pace at which AI is developing, this integration will accelerate.

How then are fewer soldiers supposed to maintain human veto power over faster and massively greater numbers of robotic weapons on land, underwater and in the skies?

As we wrote, “When robots rule warfare, utterly without empathy or compassion, humans retain less intrinsic worth than a toaster—which at least can be used for spare parts.” The usual rejoinder is that robots would behave better than humans on the battlefield.

For example, Ronald Arkin, PhD, the director of the Georgia Institute of Technology’s mobile robot lab, is programming robots to comply with international humanitarian law. Perhaps someday, as a result, an autonomous weapon might be able to distinguish between a small combatant and a child, resolving one crucial challenge. Let’s hope the enemy doesn’t wear masks—or put them on children—to confuse the robot’s facial recognition software.

Other computer scientists are focusing on machine learning as the route to making robots, in their view, better ethical decision-makers than humans. At one lab, researchers read bedtime stories to robots to teach them right from wrong. Apparently Dr. Seuss was R2-D2’s favorite author.

These endeavors, however, are beside the point since a robot’s actions are not moral, even if it passes the Turing test and behaves so intelligently it seems indistinguishable (except for appearance, for now) from humans. Robotic actions are a matter of programming, not moral agency. They will hunt solely by sensor and software calculation.

In the end, “death by algorithm is the ultimate indignity.”

National Discussion & DARPA

As an article in the Columbia Science and Technology Law Review has noted, a scholar identified the basic problem regarding new technologies more than 35 years ago. Before development, not enough is known about risk factors to regulate a technology sensibly. Yet after deployment, it’s too late, since market penetration is too great to reverse usage.

In this case, however, there is enough legitimate concern about lethally autonomous weapons systems to warrant serious consideration, and deployment has not yet occurred. A significant step towards consideration was taken in 2014 with the publication of a report by the National Research Council and National Academy of Engineering, at the request of DARPA, “Emerging and Readily Available Technologies and National Security.” The report studied the ethical, legal and societal issues relating to the research, development and use of technologies with potential military applications. Maj. Gen. Latiff served on the committee that focused on militarily significant technologies, including robotics and autonomous systems.

The report cited fully autonomous weapons systems that have already been deployed without controversy. Israel’s Iron Dome antimissile system automatically shoots down barrages of rockets fired by Hamas, a Palestinian terrorist organization. The Phalanx Close-In Weapons System protects U.S. ships and land bases by automatically downing incoming rockets and mortars. These weapons would respond autonomously to inbound manned fighter jets and make the kill decision without human intervention. However, these systems are defensive and must be autonomous since humans can’t react fast enough. Such weapons don’t pose the same moral dilemma as offensive weapons since we have a fundamental right to self-defense.

Also mentioned were offensive weapons that could easily operate with complete lethal autonomy, such as the Mark 48 torpedo and iRobot, which is equipped with a grenade launcher. The report sets out a framework for initiating a national discussion of questions such as whether autonomous systems could comply with international law.

However, if machines are deployed to seek out and kill people, there is no basis for humanitarian law in the first place. Every individual’s intrinsic worth, which constitutes the basis of Western civilization, drowns in the Robo-Rubicon.

How much intrinsic worth does a machine have? None. Its value is entirely instrumental. We don’t hold memorial services for broken lawnmowers. At best we recycle. There is no Geneva Convention for the proper treatment of can openers or even iPhones. Once lethal autonomy is deployed, people can have no more than instrumental value, which means that democracy and human rights are mere tools to be used or discarded as the ruling classes see fit.

The answer to the dilemma that lethal autonomy poses, to be clear, does not involve a retreat from technology but securing enough of an advantage that the U.S. can leverage it to establish international conventions on the military uses and proliferation of lethal autonomy and other worrisome emerging technologies.

The wider importance of lethal autonomy becomes clear in considering the enormous social threat that automation poses. On the horizon is massive job displacement via automated taxis, trucks and increasingly sophisticated task automation affecting most employment arenas. Already in Japan there is a fully autonomous hotel without a single human worker. In many states, truck driver is the most common job. What will hundreds of thousands of ex-drivers, averaging over 50 years of age, do once autonomous transportation corridors are created? True, there’s a shortage of neurosurgeons—at least for now.

IBM Watson, the artificial intelligence (AI) system that famously beat the top human champions on the quiz show Jeopardy! in 2011, has since been used to launch a financial robo-adviser for institutional clients. Not only are human financial advisers getting nervous, so are professionals throughout finance, due to the proliferation of robo-advice. And the scenario is similar to lethal autonomy in that these tools are marketed as assistive, i.e., with human professionals in the loop gaining productivity. But how long will that last as AI evolves and faster computer chips are developed? IBM now offers anyone free access to a cloud-based quantum computer for open experimentation.

As AI becomes increasingly advanced, more functions will be done better, faster and cheaper by machines. Already, autonomous robots are performing surgery on pigs. Researchers claim that robots could outperform human surgeons on human patients, reducing errors and increasing efficiency.

Some experts argue that the “jobless future” is a myth, that “when machines replace one kind of human capability, as they did in the transitions from … freehold farmer, from factory worker, from clerical worker, from knowledge worker,” wrote Steve Denning in his column at Forbes.com, “new human experiences and capabilities emerged.”

No doubt this will be true to some extent as technology facilitates fascinating and valuable new occupations, heretofore unimaginable. But the problem isn’t that machines are replacing “one kind of human capability,” but that robots threaten to replace almost all of them within a short period of time.

There are two questions: What will happen to our humanity in big automation’s tsunami, and who (or what) does this technology serve?

Regarding our humanity, recent trends are disturbing. In medicine, not only are jobs at risk in the long run, but robots will increasingly make ethical and medical decisions. Consider the APACHE medical system, which helps determine the best treatment in intensive care units. What happens when the doctor, who is supposed to be in charge, decides to veto the robo-advice? If the patient dies, will there not be multimillion-dollar lawsuits, filed within seconds once the legal profession is roboticized (thereby replacing rule of law with regulation by algorithm)? In short, in this arena and elsewhere, are we outsourcing our moral and decision-making capacity?

“No one can serve two masters,” said Jesus in an era when children were educated at home, learning carpentry (to choose a trade at random) from their father. Today, increasing numbers of children—now a third, according to a survey in the U.K.—start school without basic social skills, such as the ability to converse, because they suffer from a severe lack of attention and interaction with parents who are possessed by smartphones. Technology has become the god of worship, and kids are learning they are far less important than digital devices. How much will this generation value—or even know—their humanity and that of others? Is it not “natural” in this inverted world to completely cede character and choice to the Matrix?

“Humans are amphibians—half spirit and half animal,” wrote C.S. Lewis. “As spirits they belong to the eternal world, but as animals they inhabit time.” Machines can support both spheres—if intelligently designed according to just principles with people maintaining control. This would seem common sense, but that is becoming the rarest element on the periodic table.

Tally-Ho & Tally Sticks

Millennials and succeeding generations will remake the world via digital technology. Big data and big automation might cure cancer, reverse aging, increase human intelligence and solve environmental issues. Imagine a war where few die or lose limbs. These wonders and more seem more than plausible in what many see as a dawning utopia. And it’s not likely to be an “either/or.” A kidnapped child might be located in minutes, yet the same surveillance tools might greatly restrict personal freedoms.

Certainly there will be huge economic and creative opportunities, at least for some. Experts predict that robot applications will yield trillions of dollars in labor-saving productivity gains by 2025. Meanwhile, an Oxford University study in 2013 predicted that about half of jobs in the U.S. are vulnerable to being automated within the next decade or two. If, as seems likely, jobs destroyed greatly outnumber jobs created, what does society do with the replaced?

Some can retrain or transfer skills, but most might become permanently jobless. It’s unlikely that many former taxicab drivers or even surplus middle-aged lawyers, as examples, could be repurposed for most digital-based jobs, especially as those positions decline in number.

Consider the fate of tally sticks, which are notched pieces of wood used from prehistoric times to keep accounts (ergo, “tallies”). In 1826, England’s Court of Exchequer began transferring records from these sticks to ink and paper. By 1834, there were tens of thousands of unused tally sticks, which were disposed of in a stove in the House of Lords. There were so many of these suddenly useless carbon-based units that the fire spread to the wood paneling and ultimately burned down both the House of Lords and the House of Commons.

If the current presidential election cycle has shown anything, it’s that there is already growing dissatisfaction among the majority of Americans, which could spark a social conflagration.

Nonsense, some might argue: Americans take care of their own. Perhaps, but our fundamental commitment to the common good might disintegrate.

The United States Constitution was founded on the Judeo-Christian belief in the intrinsic worth of every individual, as articulated eloquently in the Declaration of Independence: “We hold these truths to be self-evident, that all men are created equal, that they are endowed by their Creator with certain unalienable Rights, that among these are Life, Liberty and the pursuit of Happiness.” True, it took nearly a century to outlaw slavery and another hundred years to eliminate the legal barriers to racial equality. But justice prevailed precisely because injustice contradicts the nation’s founding principles.

There was an inevitable logic to the civil rights movement.

The question now is whether full lethal autonomy destroys that foundation. If technology matters more than people, then rights are completely “alienable.” If software renders human life expendable, then it is a much smaller moral leap to indifference towards those replaced by automation. Without a career and the ability to earn a living and accumulate enough resources to start a business, there is neither liberty nor any pursuit of happiness. Ironically, a proposal gaining support in Silicon Valley, where automation is being spearheaded, is a basic guaranteed income. This might relieve some guilt, but it is neither affordable nor desirable. Work is essential to developing human potential. In fact, about 77 percent of Swiss voters rejected a guaranteed-income proposal in a national referendum on June 5, 2016.

We wrote the original article in The Wall Street Journal with urgency, to provoke discussion of lethal autonomy (tally-ho! for robots) as a moral pitfall and gateway before it becomes a fait accompli.

As he crossed the Rubicon, according to ancient accounts, Caesar uttered the famous phrase, “The die is cast.” Ominous words for our future, if we fail to assert our humanity.


USAF Maj. Gen. (Ret.) Robert H. Latiff, PhD, is an adjunct professor at the University of Notre Dame and George Mason University and holds a PhD in Materials Science from the University of Notre Dame. Latiff served in the military for 32 years. His assignments included Commander of the NORAD Cheyenne Mountain Operations Center; Director, Advanced Systems and Technology; and Deputy Director for Systems Engineering, National Reconnaissance Office. Since retiring in 2006, Latiff has consulted for the U.S. intelligence community, corporations and universities in technological areas, such as data mining and advanced analytics. He is the recipient of the National Intelligence Distinguished Service Medal and the Air Force Distinguished Service Medal. Latiff’s first book, Future War: Preparing for the New Global Battlefield, was published by Alfred A. Knopf in 2017. His second book, Future Peace, will be published by the University of Notre Dame Press.

Patrick J. McCloskey is the Director of the Social and Ethical Implications of Cyber Sciences at the North Dakota University System and serves as the editor-in-chief of Dakota Digital Review. Previously, he served as the Director of Research and Publications at the University of Mary and editor-in-chief of 360 Review Magazine. He earned an MS in Journalism at Columbia University’s Graduate School of Journalism. McCloskey has written for many publications, including the New York Times, Wall Street Journal, National Post and City Journal. His books include Open Secrets of Success: The Gary Tharaldson Story; Frank’s Extra Mile: A Gentleman’s Story; and The Street Stops Here: A Year at a Catholic High School in Harlem, published by the University of California Press.