Since the launch of ChatGPT in November 2022, conversations around artificial intelligence (AI) have exploded. Experts and non-experts alike are making predictions, most of them world-ending or societally destructive. Even some of the biggest names in technology are calling for a pause in AI development and clamoring for regulation due to potential doomsday scenarios.

In future articles, I will discuss reasonable doomsday possibilities, but here I dispute the growing fear that AI will take our jobs and force everyone onto a Universal Basic Income. By looking at history and the evolution of the workforce over the past several decades, I’ll show that we will continue to have paid, productive careers.

What is AI & Why the Controversy?

The concepts behind AI date to 1936, when Alan Turing described the Turing machine, a theoretical model of a machine that could mimic the step-by-step calculations of a human. Yes, it was revolutionary, but only a first step. In 1956, the term “artificial intelligence” was coined by John McCarthy, one of the field’s pioneers; the first functioning AI programs of that era were very simple and not intelligent. Starting in the 1950s, an explosion of science fiction imagined AI possibilities that technology couldn’t yet match. An avid television watcher could be forgiven for believing that every computer was evil and dreamed of taking over the world and killing its human oppressors. Many children of the 1980s fully expected that, by 2023, we would see RoboCop fighting the Terminator in the streets. Sci-fi in the late 1990s portrayed intelligent machines enslaving the entire human race to use us as batteries.

Hollywood seldom depicts beneficial AI. Data from “Star Trek: The Next Generation” and KITT from “Knight Rider” are among the few intelligent machines that don’t want to murder us all. It is no wonder that so many people are afraid of technology.

While Hollywood made movies such as “WarGames,” in which Joshua, an intelligent computer, planned to nuke the entire world simply to win a war game, real-world computers were vastly disappointing in comparison. Unable to interpret spoken language or even store a decent photo, the real machines of the 1980s and 1990s were good only at quickly sorting and organizing data, a long way from intelligence.

Over the following decades, hardware became vastly more capable, following Moore’s Law, the observation that the number of transistors on a chip doubles about every two years. The software, however, was nothing we would recognize as intelligent. Computers impressed the world at games such as chess: in 1997, IBM’s Deep Blue defeated world champion Garry Kasparov. But the machine wasn’t doing critical thinking. The software was an optimization engine: it searched through enormous numbers of possible moves and replies (up to about 200 million positions per second), scored the resulting positions and selected the move with the best outcome. Regarding “intelligence,” this was akin to a magician making a rabbit disappear by switching off the light shining on the hat. The rabbit looks like it disappeared but is still in the hat. Similarly, Deep Blue looked intelligent, but the young AI was just performing fast calculations in a game with roughly 10¹¹¹ possible move sequences: an insurmountable search for a human, and one that a computer could explore far enough to win.
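For readers curious what an “optimization engine” for chess looks like, here is a minimal sketch of minimax search with alpha-beta pruning, the textbook technique chess programs of that era were built on. It is not Deep Blue’s code: the tiny game tree and its scores are invented for illustration, and a real engine would generate moves and score board positions rather than read them from a nested list.

```python
import math
from typing import Union

# A toy game tree (invented for illustration): integers are scored positions
# from the machine's point of view; lists are positions whose children are
# the possible next moves.
Tree = Union[int, list]


def alphabeta(node: Tree, maximizing: bool,
              alpha: float = -math.inf, beta: float = math.inf) -> float:
    """Return the best score reachable from node, skipping hopeless branches."""
    if isinstance(node, int):  # leaf: an already-scored position
        return node
    if maximizing:  # the machine picks the highest-scoring move
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:  # the opponent would never allow this line
                break
        return best
    best = math.inf  # the opponent picks the lowest-scoring reply
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:  # the machine already has something better
            break
    return best


def best_move(tree: list) -> int:
    """Index of the move whose worst-case outcome scores highest."""
    scores = [alphabeta(child, maximizing=False) for child in tree]
    return scores.index(max(scores))
```

For example, `best_move([[3, 5], [2, 9], [0, 1]])` picks move 0: whatever the opponent replies, that move guarantees a score of at least 3, while the other moves could leave the machine with 2 or 0. Nothing here “understands” chess; it is fast, systematic comparison.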

Fourteen years later, in 2011, IBM’s Watson won the TV game show “Jeopardy!,” marking a true leap forward in software’s ability to perform intelligent tasks that went beyond crunching numbers faster than humans. While extraordinary, this was still a simulation of intelligence rather than the real thing.

AI, by its nature, simulates intelligence, which often tricks people into believing a true intellect is at play. Modern AI can learn from experience, use that learned knowledge to draw conclusions, identify subjects in pictures, solve complex problems, understand different languages and create new concepts. While these abilities appear intelligent from a distance, closer examination shows that the software is fit for purpose: built to excel at managing data and tasks that are commodity (identical in nearly every situation), repeatable, predictable, mundane and boring.

This sort of work is the essential reason computers exist, and why the world is putting so much effort into developing AI technology. Most people neither fully understand nor appreciate how much of our work in every industry can be described by these five words, which makes that work the perfect candidate for automation by AI.

AI & Workforce

Populations in developed countries are aging and, in many cases, shrinking. Although the American fertility rate has dropped to 1.78, below the demographic replacement rate of 2.1, it remains much higher than in many other industrialized nations. Japan, for example, is currently projected to fall to half its current population by 2100, while China will likely have fewer people of working age than the U.S. by 2045.

The workforce consequences are profound. In the U.S., 47 states currently have unemployment rates at or below 4 percent, which is considered “full employment.” Every state is aggressively recruiting workers in fields such as healthcare, agriculture, high technology and other industries requiring polytechnic skills. Nationwide, the production requirements of our businesses and services far outstrip our workforce capacity. States such as North Dakota have endured workforce crises since 1970, seldom able to attract the talent they need. Today, with North Dakota’s unemployment rate below 2 percent, almost every organization there is recruiting. AI offers a solution: not replacing the workforce, but augmenting it as a tool.

This is where AI turns from the “big scary” into one of the only true hopes for closing our massive workforce gaps, here and worldwide. Most folks can appreciate tool augmentation in manufacturing. Consumers, whether shopping for cars or pencils, expect high levels of consistency and quality, which AI delivers by making fabrication more precise and reliable.

Manufacturing jobs have gradually been replaced by automation since the 1980s. From 1990 to 2000, manufacturing jobs fell from about 19 million to 15 million, while, counterintuitively, salaries increased by 18 percent. Automation eliminated less-skilled tasks, shifting the remaining workers toward higher-value labor.

In healthcare, the U.S. currently faces severe shortages across the board, including doctors, pharmacists and anesthesiologists, and we lack several hundred thousand nurses. We aren’t training enough clinicians to meet demand.

While every patient’s condition is unique, many aspects of care are very similar from one treatment plan to the next. Here AI already adds real value, for example by personalizing treatment plans, remotely monitoring individual patients, analyzing entire population groups, optimizing medical tests, operating preventive care programs, administering medications and powering robotic care assistants.

One of healthcare’s biggest concerns is the administration of medications. Errors can cause serious side effects for patients, up to and including death. In fact, medication mistakes affect more than seven million American patients yearly[i] and cause between 7,000 and 9,000 deaths.[ii] Even a non-emergency medication error costs about $4,700 and extends the patient’s hospital stay by 4.6 days.[iii] AI-enabled medication verification systems help ensure the patient receives the correct drug (taking allergies into account), dose, time and route (how the medication is administered, such as orally or by injection). Several such systems have recently been deployed in medical centers nationwide and are expected to achieve a tenfold error reduction compared to manual systems.
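The checks described above (right patient, right drug with allergies considered, right dose, right time, right route) can be sketched as a simple comparison between the prescribed order and what is scanned at the bedside. This is a hypothetical, purely rule-based illustration, not the design of any deployed product: the class names, fields and the 30-minute time window are all assumptions, and any real system would involve far more, such as barcode scanning, pharmacy databases and the AI components this sketch leaves out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Order:  # hypothetical medication order written by a clinician
    patient_id: str
    drug: str
    dose_mg: float
    route: str  # e.g. "oral" or "injection"
    due: datetime


@dataclass
class Administration:  # hypothetical record scanned at the bedside
    patient_id: str
    drug: str
    dose_mg: float
    route: str
    time: datetime


# Assumed tolerance for "right time"; real policies vary by drug and facility.
TIME_WINDOW = timedelta(minutes=30)


def verify(order: Order, given: Administration, allergies: set[str]) -> list[str]:
    """Return every problem found; an empty list means all checks passed."""
    problems = []
    if given.patient_id != order.patient_id:
        problems.append("wrong patient")
    if given.drug != order.drug:
        problems.append("wrong drug")
    if given.drug in allergies:
        problems.append("patient is allergic to this drug")
    if given.dose_mg != order.dose_mg:  # exact match assumed for simplicity
        problems.append("wrong dose")
    if given.route != order.route:
        problems.append("wrong route")
    if abs(given.time - order.due) > TIME_WINDOW:
        problems.append("outside the scheduled time window")
    return problems
```

Returning every discrepancy at once, rather than stopping at the first, lets the nurse see the full picture before anything reaches the patient.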

AI is also greatly accelerating drug design using genomic data, speeding up clinical trial diagnostics and testing, and improving inventory management and claims processing.

AI Increasing Value, Lowering Costs

Virtually every other industry and major organization is now using AI to lower costs and increase value. Governments employ AI to manage forms and process citizen requests more quickly. Robotic Process Automation, which automates routine office tasks, is now in use in almost every state government. While these deployments are still limited, in 2017 the Brookings Institution estimated that 45 percent of all government work could be automated using technology that had been available five years earlier.[iv]

Today’s AI is far more capable by comparison, suggesting that it can automate much more of the bureaucratic workload and make government services far more efficient and responsive at greatly reduced cost.

The energy industry uses AI to manage power loads. Power companies across the country run AI systems that ingest terabytes of energy-use data every day and distribute power accordingly to avoid spikes and outages. This lowers maintenance costs across the distribution system as well as energy costs. AI also helps balance environmental concerns with energy generation, improving a power company’s ability to manage both generation and transmission while meeting environmental goals.

Retail businesses use AI to streamline checkouts and, ultimately, to let you simply walk into a store, fill your cart and walk out. No checkout lines, ever. AI also helps retailers decide where to place products in stores and how to target advertising more accurately.

National defense is completely dependent on AI today. For several years, our cybersecurity defense has been driven by AI around the clock, fending off vast numbers of attacks from adversaries around the globe. In North Dakota, government agencies, including the military, ward off 4.5 billion (yes, billion) attacks per year from state actors such as North Korea, Russia and China. That’s roughly 8,600 attacks per minute against our state’s systems. North Dakota’s cybersecurity ranks among the most advanced anywhere, defending against 99.999999 percent of attacks through automated tools that leverage AI.
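The annual attack count converts to per-minute and per-second rates with simple arithmetic. This sketch uses only the figures stated above (4.5 billion attacks per year and a 99.999999 percent defense rate) and ignores leap years.

```python
ATTACKS_PER_YEAR = 4.5e9           # annual figure cited for North Dakota
MINUTES_PER_YEAR = 365 * 24 * 60   # 525,600 minutes, ignoring leap years
DEFENSE_RATE = 0.99999999          # 99.999999 percent, as stated

per_minute = ATTACKS_PER_YEAR / MINUTES_PER_YEAR
per_second = per_minute / 60
# Attacks per year that fall outside the stated automated defense rate.
remaining = ATTACKS_PER_YEAR * (1 - DEFENSE_RATE)

print(f"{per_minute:,.0f} attacks per minute, {per_second:,.0f} per second")
print(f"about {remaining:,.0f} attacks per year not stopped automatically")
```

Dividing 4.5 billion by the 525,600 minutes in a year gives roughly 8,600 attacks per minute, or about 143 every second; at the stated rate, only about 45 attacks a year would get past the automated defenses.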

Agriculture also uses AI every day, both to forecast the weather and for what is now called precision agriculture. This involves AI-enabled cameras and other sensors on farm equipment, as well as drones and satellites, used to spread fertilizer more accurately, spray weeds, match plant species to soil, reduce chemical and topsoil runoff into streams and rivers, and optimize data inputs for crop insurance. In short, AI greatly helps farmers increase crop yields with less water, fertilizer and chemicals, at lower cost and with significant benefit to the environment.

What impact will all this automation have on the workforce? What has it done already over the past 10 years of implementation? It has freed people from commodity, repetitive, predictable, mundane, boring work and let them focus on work of higher value to their companies and themselves.

Jobs Lost but Bigger Gains

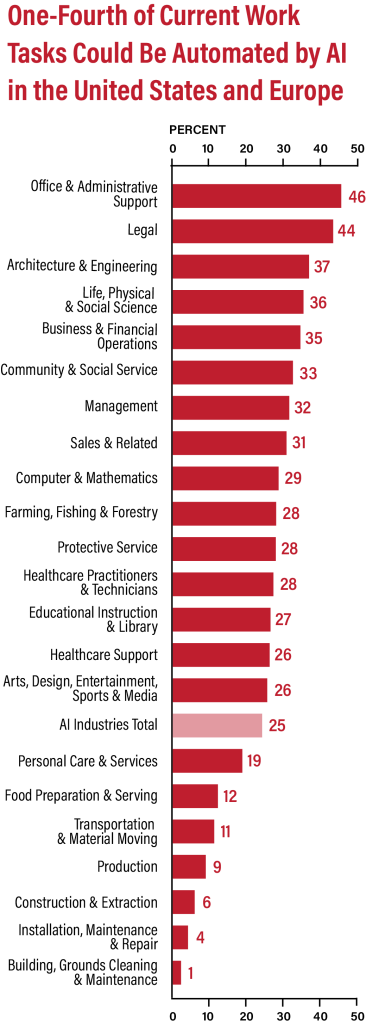

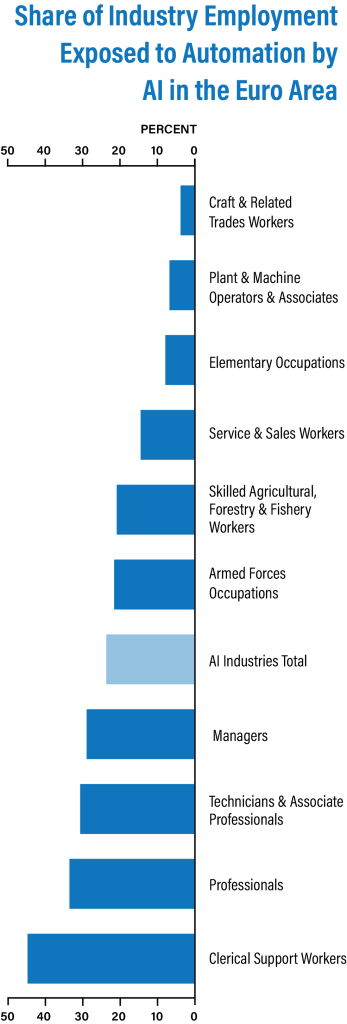

Many media pundits and industry experts say that AI will go from being an enabler to being an economy-destroying force. Goldman Sachs predicts that 25 percent of work tasks will be automated by AI and that almost the same proportion of jobs will be replaced.

Will there be a disruptive period of change? Yes, absolutely, and change doesn’t come without pain. But ultimately, history has demonstrated the world will be more productive, and standards of living will increase.

An MIT study of automation showed that from 1947 through 2016, 32 percent of all jobs disappeared, offset by a reinstatement of 29 percent. “Reinstatement” refers to new jobs created as work processes change. While a large number of jobs were eliminated, new jobs sprang up around the new capabilities.

The ability to build everything from homes to immense skyscrapers grew exponentially once we stopped employing thousands of workers with shovels and started using bulldozers and other heavy equipment. What happened to the displaced workers? Did they just sit down and die? No, they learned to drive bulldozers or otherwise reskilled. Our education system adapted to the changing times. No one now would want to return to the days of outhouses, medical care via leeches and candles for lighting. Technology completely transformed the world then, just as AI is doing today.

According to the U.S. Bureau of Labor Statistics, the percentage of low-skilled workers has dropped 5 percent in just the past 10 years.[v] At the same time, we have seen unemployment continue to drop below 4 percent (commonly considered full employment) and the workforce participation rate.

History Says People Figure It Out

Many experts predict that accounting will be one of the first white-collar professions to be fully automated. Perhaps, but I predict that accountants will be able to shift into a new collection of jobs. Each of the positions in this illustration requires skills very similar to those of an accountant. Relatively minimal retraining will be needed and, like other white-collar workers, former accountants will thrive in this evolving AI economy.[vi]

Since 1995, the growth of computer and internet use has completely changed the way we work. App developers didn’t exist in 2007. Data scientists, social media managers, automotive robotics technicians and many other occupations were created only a few years ago. The 10 fastest-growing and best-paid jobs today didn’t exist a decade ago. AI will continue this positive revolution and growth, including the continuing disappearance of jobs that no one wants to do because they are boring and don’t make people feel good about themselves. In the end, mundane work will be done by computers, freeing you to pursue more interesting and higher-value careers.

Ten years ago, data on AI jobs in the U.S. barely existed; today there are almost 800,000 open AI-related jobs across the country,[vii] most of which didn’t exist five years ago. While it is true that AI will continue to encroach on more jobs over the coming decades, employees who engage in continuous learning and adopt a growth mindset will always be in demand.

In 2018, McKinsey & Company, a leading global management consulting firm, predicted that AI would add $13 trillion to the world economy by 2030.[viii] In my opinion, based on the last two years of observations from companies Bisblox works with, AI will likely add closer to $25 trillion to the global economy by 2030. AI is driving vastly higher productivity in a world with a shrinking workforce. This will result in better-paying and far more engaging careers for everyone willing to keep learning and adapting to the changing AI economy.

Over the long term, however, government is the primary caveat: it might push AI away from empowering people and toward becoming an authoritarian oppressor. Rather than protecting people, governmental regulations often enable big corporations to maximize profits and control, and this could completely upset free markets and ultimately destroy jobs instead of creating them. ◉

References

[i] Journal of Community Hospital Internal Medicine Perspectives, 2016: https://pubmed.ncbi.nlm.nih.gov/27609720/

[ii] NCBI, 2021: https://www.singlecare.com/blog/news/medication-errors-statistics/

[iii] Thibodaux Regional Health System, 2023: https://www.thibodaux.com/about-us/quality-initiatives/successes/bedside-medication-verification/

[iv] Brookings, 2017: https://www.brookings.edu/blog/up-front/2022/01/19/understanding-the-impact-of-automation-on-workers-jobs-and-wages/

[v] Bureau of Labor Statistics, 2023: https://data.bls.gov/cew/apps/data_views/data_views.htm#tab=Tables

[vi] Bisblox, 2023: https://www.bisblox.com/

[vii] Visual Capitalist, 2023: https://www.visualcapitalist.com/top-us-states-for-ai-jobs/

[viii] McKinsey, 2018: https://www.mckinsey.com/featured-insights/artificial-intelligence/notes-from-the-AI-frontier-modeling-the-impact-of-ai-on-the-world-economy

Shawn Riley, Bisblox

Shawn Riley served as the Chief Information Officer for the State of North Dakota and as a member of Gov. Doug Burgum’s cabinet from 2017 through 2022. Riley cofounded Bisblox, North Dakota’s first Venture Studio, in 2022, where he serves as Managing Partner. Bisblox uses a proprietary Start-Run-Grow-Transform model to help founders and entrepreneurs build companies in the Americas and Europe. Previously, Riley served as the Chief Information Officer of Austin and Albert Lea Medical Centers, Information Management Officer of the Mayo Clinic Health System, and Executive of Technology Operations Management at Mayo Clinic. Riley earned a BS in Technology Administration from American Intercontinental University, and an MBA and MHA from Western Governors University.