The integration of artificial intelligence (AI) into education has unlocked powerful tools and possibilities, while also revealing challenges that threaten the integrity of genuine learning.[i] As an educator, I have observed a growing divide among students over recent semesters. Diligent students engage deeply with course materials, absorbing complex ideas, asking questions and developing a true understanding of the subject matter. Such students, intent on building their knowledge and skills, approach AI tools with caution, refusing to treat them as shortcuts because they see the value of effort in the learning process.

However, a contrasting group of students, those less invested in learning, has embraced AI tools as a way to complete assignments with minimal effort. These students rely on AI-generated answers that, while well-structured and grammatically sound, fail to engage with the substance of the assignment.[ii] This discrepancy raises critical questions about the role of generative AI tools such as ChatGPT in the classroom and how their misuse may impact[iii] both educational and societal outcomes.

AI’s Attraction for Students

Some students find AI’s capabilities particularly appealing because they offer a way to produce polished work with little effort. Faced with a challenging assignment, these students enter prompts into AI tools, accept the generated output as their own and submit it without much thought or revision. While these responses may appear articulate,[iv] they often lack depth and relevance, ignoring the analytical or critical thinking elements that the assignment demands. This practice reduces assignments designed to stimulate learning into formulaic, surface-level submissions. Rather than engaging with the material, students bypass the intellectual process altogether, replacing it with AI-generated content that creates an illusion of competence.

In this way, AI becomes less a tool for support and more a mechanism that masks a lack of true understanding, undermining the educational process and leaving students unprepared for more demanding intellectual tasks.

Below is an assignment from a senior-level business strategic-management class. The class introduces students to concepts in strategic management that explain why and how Fortune 500 companies exist and compete within their industries. Over the course of the semester, students learn concepts such as corporate governance and mergers and acquisitions. The course organizes each concept into a unit, and together the units make up the complete syllabus. In each unit, the instructor assigns weekly prompts that students answer in the form of discussion posts, which they publish on the course website for the instructor and their classmates to read, reply to and comment on. The purpose of this assignment is to give students an opportunity to demonstrate their understanding of that week’s concept.

A student typically needs at least 30 to 45 minutes to answer a prompt such as the one below, which includes reading the question, thinking through an answer and typing it out. At first glance, the answer below appears well-structured. On closer reading, however, the content does not address the prompt, even though it touches on the subject matter: it never tests its chosen competency against the four criteria the prompt specifies and instead drifts into internal audits and a firm’s strengths and weaknesses. The answer was generated in two seconds by a generative AI program, ChatGPT-4.

Instructor-Assigned Prompt:

In this section we learned that firms combine resources (tangible and intangible) to form capabilities and over time, capabilities become core competencies provided they satisfy the four criteria of sustainable competitive advantage (Valuable, Rare, Costly to Imitate, Non-Substitutable).

Choose a company you are a stakeholder of.

In your informed opinion, what is one core competency your firm possesses? Why do you think it’s a core competency?

Generative AI Answer (ChatGPT-4):

One company I am a stakeholder in is Chase Bank. One of its core competencies is its ability to offer a wide variety of financial services, including retail banking, commercial banking, and investment services, all under a trusted and well-known brand. This comprehensive offering allows Chase to meet the diverse needs of its customers. To identify its internal strengths and weaknesses, Chase, like many companies, conducts internal audits, performance reviews, and market analysis. Strengths, such as its wide reach, strong brand reputation and diversified financial services, are identified through these processes. Weaknesses may include issues such as past reputational damage or operational inefficiencies, which are highlighted through regular assessments.

It is vital that managers have a clear understanding of their firm’s strengths and weaknesses to ensure they can leverage advantages and address shortcomings effectively. For example, Chase managers need to build on their extensive network and trusted brand while addressing any areas of risk, such as improving internal controls to prevent further reputational harm. This understanding helps in making strategic decisions that foster long-term growth and competitiveness in the financial services industry.

In contrast, a competent answer by a diligent student to the above prompt would read:

As a customer of Target in Fargo, I believe its core competency lies in its private-label brands, such as Good & Gather for groceries and Threshold for home goods. These brands offer a blend of quality, affordability and style that is distinctly associated with Target and has become integral to its appeal among local consumers.

Target’s use of its private-label brands qualifies as a core competency because it satisfies the four criteria of sustainable competitive advantage. First, the private-label brands are valuable because they enable Target to meet the demand for affordable, high-quality products without relying on third-party suppliers, ensuring better margins and consistent quality.

Second, the brands are rare: no other retailer in Fargo offers a similar breadth of exclusive private-label products that combine functionality with a modern aesthetic. Walmart and Costco, for instance, have their own private labels, but these chain stores lack the same focus on design and lifestyle integration that Target’s brands deliver.

Third, Target’s private-label brands are costly to imitate. Costco and Walmart would need to invest significant resources to imitate these brands. Developing a suite of private-label products that resonate with consumers across multiple categories, from food to furniture, would require significant investment in supply chains, product design and brand development.

Finally, Target’s private-label brands are non-substitutable because, while other retailers sell similar products, the unique combination of price, quality and branding that Target’s private labels provide cannot be replicated elsewhere. Also, during the winter months, its seasonal displays feature not just national trends but practical cold-weather items that resonate with the realities of living in Fargo. This uniqueness is difficult for competitors to mimic because it requires a deep understanding of consumer trends, robust supplier relationships and significant branding expertise. Walmart and Costco, while offering their own private labels, tend to focus primarily on functionality and cost-efficiency, lacking Target’s emphasis on design and lifestyle appeal. Moreover, Target’s private labels benefit from a cohesive branding strategy that integrates in-store presentation, online marketing, and product packaging in a way that reinforces their premium yet affordable identity. This holistic approach creates a distinct value proposition that other retailers cannot easily substitute.

Consequences for Learning & Classroom Dynamics

The misuse of AI in the classroom creates a distinct divide between those who approach learning with genuine effort and those who do not. Diligent students, who dedicate themselves to understanding and applying course concepts, may feel demoralized upon seeing peers achieve comparable or even higher grades by using AI to sidestep effort.

For these engaged students, the devaluation of authentic work can create a sense of futility and frustration, especially when AI-generated work receives similar or greater recognition.

This misuse also complicates the assessment process. For educators, grading becomes challenging when well-structured yet superficial content must be distinguished from thoughtful, authentic submissions. Students feel the effects as well. One student shared: “I don’t use AI in my writing because I think of it as a form of cheating, and it’s disheartening when other students don’t put in the effort yet are graded the same.”

Without a clear way to assess the integrity of each assignment, academic standards risk being compromised, making it difficult to reward the students who genuinely strive for understanding.

Ultimately, AI misuse jeopardizes the core purpose of education, which is to develop independent thinkers capable of tackling real-world problems with analytical rigor.

Implications for the Workplace

The reliance on AI in academic work has implications that extend well beyond the classroom, foreshadowing problems in the workforce that today’s students will soon enter. Students who depend on AI to complete assignments without building critical thinking and problem-solving skills will struggle to adapt to professional environments that demand these abilities.

In the workplace, employees must not only generate ideas but also analyze information, devise strategies and solve complex problems, all of which require skills that indiscriminate AI use fails to develop. Employers may increasingly encounter graduates who can produce polished work yet lack the ability to make informed, strategic decisions independently. This dependency on AI will stifle innovation, reduce productivity, and weaken an organization’s ability to grow and adapt.

Additionally, individuals without foundational skills in their respective fields will contribute less effectively to team efforts, ultimately undermining the organization’s success and long-term sustainability. This will force employers to look elsewhere for talent or revisit the feasibility of entire positions within organizations.

Broader Societal Consequences

On a societal scale, the erosion of critical thinking and analytical skills due to AI misuse will create a generation unprepared to navigate the complexities of an information-driven world. As more students avoid intellectual rigor, society risks a decline in well-informed citizenship, ethical reasoning and active engagement with critical societal issues.

The public’s confidence in professionals will also suffer if the public perceives expertise as shallow or artificially created through technology. Society increasingly expects authenticity, reliability and trustworthiness from its leaders, professionals and experts, and an uncritical reliance on AI will undermine these qualities.

If AI-generated responses replace genuine expertise, a broader societal skepticism will likely emerge, reducing social cohesion, fostering cynicism and weakening collective decision-making processes. AI misuse, therefore, poses a risk not only to individual careers but to the foundations of social trust and shared knowledge, both of which are essential for societal progress and cohesion.

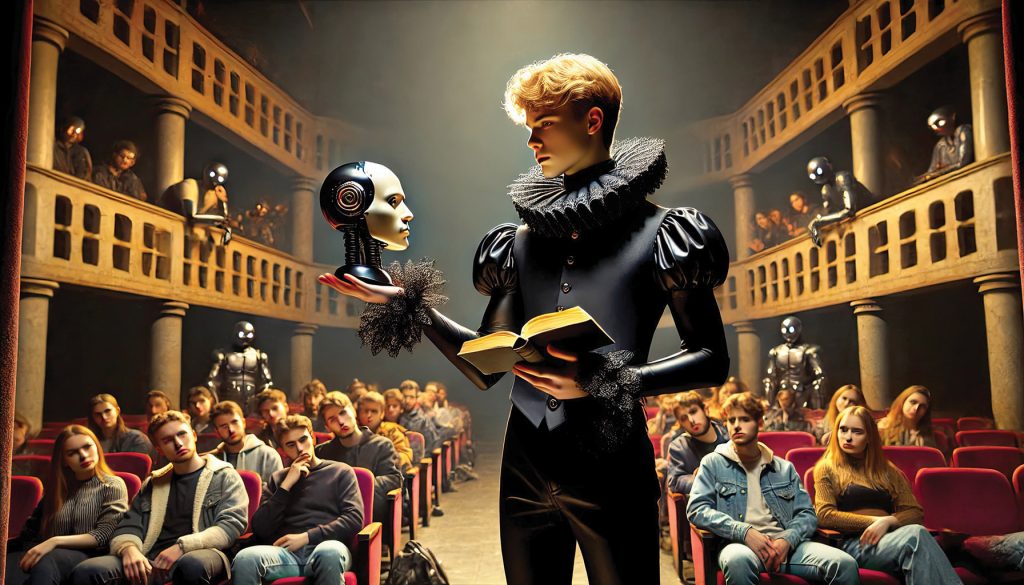

To be or not to be—a student—that is the question:

Whether ‘tis nobler in the mind to suffer

The slings and arrows of outrageous effort,

Or to take up ChatGPT, Grok 3 and Dall-E …

Ay, perchance there’s the cheater’s rub …

– WILL E

Solutions for Responsible AI Use in Education

Addressing the misuse of AI in the classroom requires a proactive, multi-pronged approach that guides students toward responsible use, while highlighting the importance of authentic learning. Educators can play a crucial role by incorporating AI literacy into the curriculum,[v] teaching students not only how to use AI responsibly but also the ethical considerations involved in its application. Designing assignments that demand personal reflection or in-class work can make it more challenging for students to rely solely on AI, encouraging them to think independently.[vi]

Institutions must also establish clear guidelines on the acceptable use of AI, leveraging detection tools to identify AI-generated work and ensure academic integrity.

Unlike traditional plagiarism, which entails the unauthorized use of another person’s work or ideas, the misuse of AI introduces a distinct ethical challenge. In this case, students submit content generated by machines as if it were the result of their own intellectual effort. While the content may technically be original, it lacks the critical engagement, analytical thought and personal investment that education seeks to cultivate. The issue lies less in the theft of ideas than in the evasion of the learning process. In bypassing the effort required to understand and grapple with the material, students compromise their intellectual development and diminish the core purpose of education: fostering independent thinking and genuine mastery of knowledge.

Ultimately, creating a culture that values diligence and genuine learning involves more than rule-setting; it requires actively recognizing and rewarding students who engage thoughtfully with their education. By encouraging self-directed learning and offering support to those who may struggle, educators can reduce the appeal of AI as a shortcut, helping students see the value of knowledge gained through their own efforts.

The misuse of AI in education poses significant challenges to the foundational goals of academic institutions, threatening to produce a workforce unprepared to address the complex challenges of the real world.[vii]

The classroom has long stood as more than a space for acquiring knowledge; it is a crucible for character, discipline and the work ethic that drives meaningful achievement. With the growing presence of AI, however, we face a critical moment of reckoning. This technology, powerful and transformative, holds the potential to enrich education, but its misuse threatens to reduce learning to a shallow transaction. When students bypass effort and rely on AI to produce answers, the classroom loses its role as a training ground for the qualities that define exceptional leaders and thinkers. The consequences extend beyond academic dishonesty; they strike at the very culture of hard work that fuels innovation, resilience and societal progress.

Work ethic is not a relic of the past—it remains the engine of success in an increasingly complex world. Yet, if students avoid grappling with ideas and the discomfort of intellectual struggle, they risk losing the grit required to persevere in the face of challenges. Without this foundation, they may graduate into a world that rewards adaptability and critical thinking but find themselves ill-prepared to meet its demands. Leaders who fail to think deeply cannot inspire, and innovators who avoid effort cannot create. A culture that celebrates shortcuts over determination risks producing individuals who crumble when confronted with adversity, rather than resilient problem-solvers.

Education must rise to this challenge, not as an opponent of AI, but as a steward of its responsible use. The answer lies not in banning technology but in redefining its role. AI should serve as a tool that amplifies curiosity and sharpens understanding, not as a crutch for evading hard work. Classrooms must emphasize effort as the path to growth, reminding students that the process of learning—mistakes, revisions and moments of clarity—is where true value lies. Teachers, institutions and students must work together to cultivate a mindset that prizes persistence over shortcuts and sees diligence as a lifelong character asset.

The stakes have never been higher. Will we foster a generation defined by intellectual rigor and creativity? Or will we allow the allure of convenience to hollow out the essence of education? The choice is ours, and the answer will shape not just the future of classrooms but the future of humanity. The values we instill today—curiosity, integrity and the dignity of effort—will determine whether AI becomes a force for enlightenment or a tool for stagnation. The path forward is clear: We must champion a culture where hard work remains the cornerstone of progress, ensuring that education prepares students not just to succeed, but to lead with resilience and purpose.

The classroom has always been a proving ground for the skills and integrity that shape the future. With AI now a powerful, ever-present force, its misuse threatens to turn education into something transactional and hollow.

But the stakes go far beyond the classroom: What kind of leaders, innovators and thinkers will emerge if we allow shortcuts to replace struggle? If students fail to engage deeply with ideas, they risk forfeiting not only their intellectual independence but also their ability to navigate a world that demands resilience, creativity and moral clarity.

Education must not yield to convenience. Instead, it must rise as a guardian of thought, challenging students to use AI as a tool for empowerment rather than an escape from effort. The questions before us are stark: Will we allow technology to erode the very qualities that make us human? Or will we harness its potential to create a generation defined by curiosity, ingenuity and courage? ◉

[i] Guardian Staff. (2024, July 31). “Universities Australia tackles cheating issues with AI and ChatGPT.” The Guardian. Retrieved from https://www.theguardian.com/australia-news/article/2024/jul/31/universities-australia-cheating-issues-ai-chatgpt

[ii] Times Higher Education. (n.d.). “Using AI to elevate academic integrity and excellence.” Retrieved from https://www.timeshighereducation.com/campus/using-ai-elevate-academic-integrity-and-excellence

[iii] Times Higher Education. (n.d.). “Speaking with students about how to use AI ethically.” Retrieved from https://www.timeshighereducation.com/counsellor/sector-trends/speaking-students-about-how-use-ai-ethically

[iv] Forbes Technology Council. (2024, June 27). “Why generative AI threatens examination integrity, creativity, and learning.” Forbes. Retrieved from https://www.forbes.com/councils/forbestechcouncil/2024/06/27/why-generative-ai-threatens-examination-integrity-creativity-and-learning/

[v] Entrepreneur Staff. (2024). “Did ChatGPT write that paper? 4 words make it easy to tell.” Entrepreneur. Retrieved from https://www.entrepreneur.com/business-news/did-chatgpt-write-that-paper-4-words-make-it-easy-to-tell/473651

[vi] Castelvecchi, D. (2024). “ChatGPT and the ethics of AI in scientific research.” Nature. Retrieved from https://www.nature.com/articles/d41586-024-02371-z

[vii] Inside Higher Ed. (2024, July 2). “Ways to distinguish AI-composed essays from human-composed ones.” Retrieved from https://www.insidehighered.com/opinion/career-advice/teaching/2024/07/02/ways-distinguish-ai-composed-essays-human-composed-ones

Ahmad Al Asady, PhD, Assistant Professor of Management, Challey Institute, NDSU

Ahmad Al Asady, PhD, is an Assistant Professor of Management and Challey Institute Faculty Scholar at NDSU’s College of Business. Professor Al Asady’s research focuses on understanding how national institutional environments, including laws and regulations, as well as culture and other informal social factors, influence international entrepreneurship and its success. His research has been published in international peer-reviewed outlets, such as International Small Business Journal and Entrepreneurship Theory and Practice. As an educator, Professor Al Asady is passionate about training the next generation of society’s leaders and problem-solvers. He earned his Bachelor of Administrative Studies (BAS) in General Management at York University and his MA in Teaching English as a Second Language (TESL).