Competition for the increasingly limited supply of those with skills is driving up wages, which is an obvious benefit for the employee, but is also inflationary for the employer’s product or service. And it doesn’t increase the supply until more people are attracted to those trades, and then not until they’re trained. Thus begins a boom for skills training.

While popular media focuses on “higher ed” issues, the long-ignored challenges are in expanding the availability of schools that teach skills associated with building, maintaining and operating the essential physical infrastructures of our society, from highways to hospitals, and from semiconductor fabs (fabrication plants) to the shale fields. These are all the kinds of skilled jobs that were labeled “essential” in the months of the Great Lockdowns. They’re also often the “dirty jobs,” as TV’s Mike Rowe, the champion of such work, labeled them. They’re the kind of jobs that require people to show up, to be hands-on.

As everyone knows, in the normal course of history’s progress, the nature of work is always changing. Many specific kinds of skills that were essential in the past are no longer needed. Different types of work emerge as the structures of industry and service change with society. Some 60 percent of the jobs that existed as recently as 1960 no longer exist as forms of employment. There is nothing new about the idea that this shifting landscape requires workers to upskill or reskill, to earn new knowledge and often formal certifications from schools of “continuing education.” What is new is that, for the first time, the source of much of the current workplace disruption, the Cloud, is simultaneously enabling better means for all the reskilling it necessitates.

In the face of an economic slowdown, or even the possibility of a recession, America still has a shortage of skilled labor in every domain from home construction to the great shale oil fields, as in the Bakken.

The domain of skills, and learning them, divides neatly into two camps. There are skills that are essentially informational, and those that are, literally, hands-on. The former involves understanding ideas (specific regulations, permissions, safety standards) associated with, say, driving an excavator at a construction site. Such knowledge can be acquired without setting foot in an excavator. But learning how to operate the excavator itself requires hands-on training.

Much has been made of the differences between learning in these two domains in terms of how much computers can help. Some three decades ago, leading computer scientists observed what is now often called “Moravec’s Paradox” (named after Hans Moravec): the irony that it’s easier to teach a computer to play chess than, say, fold laundry. It’s ostensibly a paradox because the former is referred to as a “high-level” task, whereas the latter is “low-level.” This sort of hierarchical categorization, however, fails to recognize that physical tasks entail an exquisitely complex integration of high-level human sensory capabilities, neuro-motor skills and reasoning. To put it somewhat facetiously: It’s the difference between teaching children to spell “excavator” and teaching them to operate one.

Cloud Democratization of AI

We’ll come back to the physical skills. The revolution in learning non-physical skills is not that they can be taught online or remotely. That’s been possible for quite a while, whether through TV, VCR, audio tapes or even radio. It is, of course, meaningful that remote training can now scale up rapidly, along with the Cloud’s infrastructure. But the unique distinction of future developments will be the Cloud’s democratization of AI, which will enact a different kind of disruption to teaching informational skills.

Looking at the changes in information since 1970, Massachusetts Institute of Technology (MIT) economists David Autor and Anna Salomons documented the shift in the structure of employment and, specifically, the hollowing out of highly paid “middle-skilled” jobs that typically don’t require a college degree.[1] The two general categories of such middle-skilled jobs that faced the greatest declines were physical operations and office administration. Physical operations were hollowed out mainly because of machine automation and industrial outsourcing. The decline in administrative employment was caused by the kinds of software that emerged in the late 20th century (word processing, filing, mailing, drawing, printing and spreadsheets), shifting clerical tasks away from middle-skilled employees to the desktops of professionals. AI will do the inverse with many of the “higher-order” skills currently in the domains of the professional class.

Up until now, analytical software tools have typically focused on the collection, storage and presentation of data, and have required fairly sophisticated training and education to operate. AI pattern-recognition and advice-giving—including using real-time simulations and “virtual twin” models—now routinely assist the professional manager. But as those AI tools become more intuitive, that advice can be delivered directly to the “middle-skilled,” non-college-educated employee too.

Managers and engineers are deluged with data about myriad factors relating to operational efficiency (and safety): sources, quantities, changes in location or composition of inputs, suppliers, and market dynamics. Recognizing patterns in all the information is what constitutes most daily operational decisions. But it’s in precisely these kinds of areas of complexity where AI can advise and even automate, looking for the “signal in the noise.” Such information automation pushes the ability for such decision-making out to the front lines of a factory floor or hotel front desk in the form of “virtual” assistants that “upskill” the capabilities of non-management employees.

Thus, a key feature of AI is found not in those “intelligent machines” necessarily making autonomous decisions, the feature that causes so much anxiety among prognosticators, but instead in its ability to provide informed advice with a “natural language interface” that requires neither programming skills nor special expertise. Such AI-enabled operational guidance, “intelligent digital assistants,” can operate in real-time on those front lines, whether it involves machinery or supply chain decisions that entail considering hidden complexities formerly the purview of the management class. And that AI-driven guidance and advice will be delivered, increasingly, not only in natural language but also in augmented (AR) and virtual reality (VR) interfaces.

Software in the pre-AI era led to tools such as Computer Aided Design (CAD), which mainly helped engineers in their work and eliminated the need for draftsmen. In the AI-enabled world, engineering design and even some professional aspects of manufacturing will shift to the employees doing the work rather than those managing the work. The same dynamic is coming to the IT world itself. As with manufacturing, Computer Aided Software Engineering tools have been around for many decades. But now we have AI tools that allow “programming without code.” In other words, coders are working to put other coders out of a job by creating Cloud-based tools that a nonexpert can use to create software.[2]

Big tech companies such as Oracle, Salesforce, Google and Microsoft, as well as numerous startups, are in a race to produce ever-simpler “no-code software” tools, with which customers can use natural language, intuitive graphics and interfaces to write code without knowing a jot of it. This doesn’t signal the end of coding as a profession any more than automation signaled the end of farming or construction jobs. But it does signal that coders at or above the college level will continue to be a small fraction of the share of people employed overall. Today, roughly as many software engineers exist as do people employed on farms or construction sites. Odds are good those will all remain niche occupations over the coming decade. More importantly, the democratization of software-creation will accelerate the nonexpert use of AI-enhanced tools in every profession.

Farmers, as it happens, are ahead of the curve in this trend (as they were with industrialization). Not only is farm equipment now often autonomously navigated, but decisions farmers make about what and when to plant, irrigate and fertilize are all delivered on-site with real-time data and analytic advice from Cloud-centric, AI-driven software. Another implicit bellwether of this trend was when UPS, in late 2020, offered early buyouts to many management employees while simultaneously hiring 100,000 workers for the holidays.[3] The net effect of all this real-time “upskilling” of the nonexperts will “hollow out” many jobs formerly reserved for those classified as professionals. More efficient. More production. And more disruption.

Driving Excavators & Exoskeletons

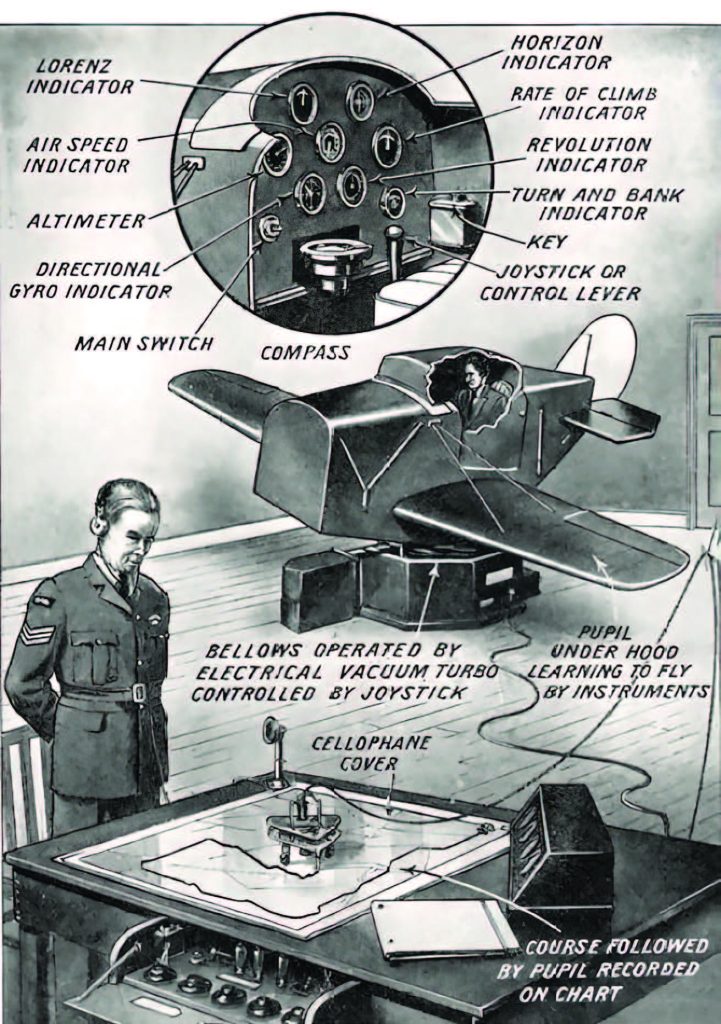

We owe to a high-school dropout the idea of using a simulator to help learn the skills of operating complex or dangerous machines. Edwin Link sold his first aircraft flight simulator in 1929. It was the first example of useful “virtual” reality, an idea Link concocted because of his love of flying (he purchased Cessna’s first airplane) and his understanding of the risks of learning how to fly.[4] Accidents and fatalities were notoriously high in those early days of commercial aviation.

During World War II, Edwin Link’s AN-T-18 Basic Instrument Trainer was standard equipment at every air training school in the United States and Allied nations.

Link’s eponymous machine would prove critical for training thousands of pilots in World War II. A complete aircraft cockpit (i.e., no wings or fuselage, etc.) with instruments and controls that responded to the pilot by moving on hydraulics created the illusion of flying and thus allowed development of the necessary reflexes. (Early on it was far from as realistic as today’s simulators, but it was good enough to see a dramatic decrease in accidents from novice pilots.) His company still exists today: Via a number of different acquisitions, it is now part of defense contractor L-3 Technologies. And today’s technology, while profoundly more sophisticated, differs little in concept and plays a central role in both training pilots and designing new aircraft.

After Link, the next pivot in the path to a broader application of VR simulators came in 1966 from Tom Furness, an electrical engineer and Air Force officer. Furness invented the idea of a helmet-mounted heads-up display as a solution to the rising complexity of cockpit instruments. That idea earned him the title of “godfather of virtual reality.” Furness, like Link, went on to create a company, indeed, dozens of them. Most recently he founded the non-profit Virtual World Society to help advance VR as a learning tool for families.[5] Today, heads-up displays are standard flight equipment, and the U.S. military trains its fleet of drone pilots on machines built by the L-3 Link Simulation & Training division, directly descended from Edwin Link’s innovation.

But until recently, only a sliver of the myriad tasks involved in learning a skill has been amenable to simulation, whether using a wood lathe, welding, plumbing or driving an excavator. In fact, the first excavator simulator didn’t arrive until just after Y2K.[6] Other heavy equipment simulators then followed quickly, with such training now as firmly established as flight simulation.[7] The market for skills simulation and training is, self-evidently, far wider than that for expensive heavy equipment and high-cost aircraft.

The demand for skills is poised to soar not only because of the new skills that will be needed for the new kinds of machines, from warehouse robots and delivery drones, to cobots (collaborative robots) in hospitals, but also because of the so-called “silver tsunami.” The economies of all the developed nations face the unavoidable demographics of the aging of the skilled workforce. The cohort of employees performing skilled tasks skews heavily to those nearer retirement age. This means that, once this group retires, the existing “skills gap” will grow and the demand for simulators to train employees more effectively, quickly and inexpensively will grow as well.

The continual advances in virtual reality have, so far, come at a cost. Link sold his trainers to the Army Air Corps in the 1930s for $65,000 (in today’s inflation-adjusted dollars). Flight simulators now cost from $1 million without motion control to as much as $10 million with full dynamic motion. That may be tolerable for training people how to fly aircraft that cost from $10 million to $100 million. But simulators with physical and tactile feedback will need to become cheaper for them to break into other domains: excavators, yes, but also all other kinds of machines, from expensive exoskeletons to remotely operated delivery drones to many classes of semi-autonomous cobots. Lower costs at higher performance are precisely the metrics that the AI, microprocessor and materials revolutions are bringing to simulators.

AR/VR: Over-Promised & Underestimated

In the early days of VR, there was rampant over-promising of what could be achieved, a common phenomenon with new technologies. Facebook famously spent $2 billion to buy the VR company Oculus in 2014, hoping that VR would rapidly enter common usage. It didn’t happen. But now, finally, the three enabling technologies underlying useful VR have reached the necessary collective tipping point.

Realism in VR still begins with the visual. The researchers chasing ever-greater screen resolution, for both large room-scale and tiny eyeglass-size displays, have achieved near life-like pixel densities, with more coming at lower cost. Image generation in real-time gets computationally harder as the pixel density rises. And any image time lag in VR systems has been documented to generate not just a sense of disbelief in the scene, but also fatigue, disorientation and even nausea. That’s being conquered now with superfast image rendering from hyper-performance GPUs. Redolent of how the progenitor of the first cellphone said that Dick Tracy comics inspired that invention, we find Taylor Scott, inspired by the iconic scene of Princess Leia in a holographic display in the 1977 Star Wars movie, unveiling in early 2021 a (prototype) smartphone display that produces a 3D holographic image without special goggles.[8]

The newest low-cost, high-performance AI engines also play a key role in moving VR systems to the next level. Facebook, not deterred from earlier false steps, has developed an AI-driven system that can dynamically track the user’s eyesight to integrate what’s seen with what’s being heard. This allows the system to replicate our brain’s ability to focus selectively on “hearing” what we are seeing, blocking out the ambient sound of a noisy environment.[9] Conquering that particular feature of VR has been called the “cocktail party challenge.” Solving it will, separately, revolutionize hearing aids. AI engines can also use motion detection combined with cameras to analyze emotional state, puzzlement or attention level. These emotion-sensing technologies (EST) are being added to simulators, but they’re also showing up as driver-assistance tech in automobiles (and other machines for which attention matters greatly).

In order for AR/VR to have the feel of reality, the human–machine interface also has to become more natural in its reception of our “input” commands. In an ideal interface, the machine (or image, or algorithm) should both respond to intentional instructions and intuit our intent. To accomplish the latter, engineers have developed interfaces that “see” our motions and actions to predict an intent. These are either called touch-free systems or intuitive gesture control systems. There are now dozens of such devices—from big companies such as Google and Microsoft, as well as startups. (As has always happened—and is the intended outcome for many entrepreneurs and investors—many of the latter get acquired by the former.)

The concept is not new. Canada’s Gesturetek, for example, provided hands-free video-based input control starting some 30 years ago, used in museums, stores and bars. But it is only in the last five or six years that simple gesture control has matured through the arrival of advanced, tiny and cheap sensors and logic chips. Across the entire applications range, from games and appliances to cars and military machines, the market for gesture-intuitive interface devices is already measured in the tens of billions of dollars.[10]

Some of those control devices are based entirely on cameras or acoustic sensors (microphones) that are already native in smartphones and cars, combined with AI and machine learning to watch, analyze and intuit intent. Some devices also take advantage of the exquisite sensitivity of silicon MEMS (micro-electromechanical systems) microphones that enable detection of both breathing and heartbeat; aside from health monitoring implications, that data can help analyze anxiety or attention. Others, such as Google’s Motion Sense, use tiny, active radar chips to track gestures. And some input devices fuse a combination of or all of the aforementioned sensing modalities.

While we’re quite a way away from a future (despite interesting research into the possibility) in which we can directly read the challenging and “noisy” signals radiating from our brain’s neurons, at least one company has developed a clever wristband that focuses instead on measuring and interpreting neural activity in your wrist: the messages the brain sends to direct the hands. The wristband, developed by the aptly named CTRL-Labs (bought by Facebook in 2019), enables computers to see, interpret and realistically simulate profoundly complex actions, such as playing a piano.

Age of “Vibrotactile Haptics”

But one of the critical elements still missing from nearly every VR system is physical feedback, particularly tactile feedback. (Link’s flight simulators use electro-hydraulics to simulate bulk motion, as do Disneyland rides.) The idea of a tangible user interface, or a tangible internet, where one can feel images, finds its origins at the MIT Media Lab in 1997.[11] It is the last remaining feature needed to bring VR technology one step closer to true realism for many tasks, and what the researchers 20 years ago at Xerox PARC termed the “age of responsive media.”[12]

In order to virtually sense touch, one needs actuators that replicate (ideally, biomimic) what nerve and muscle cells do. That old dream is realizable now because of the quiet revolution in materials sciences, and the complementary revolution in precision fabrication machines that can make devices out of those novel materials. With electrically reactive polymers and flexible ceramics, the age of “vibrotactile haptics” is emerging, taking the technology a leap past the familiar vibrating smartphone that has been around for more than a decade. Gloves made from active polymers can serve as both sensor (telling the simulator what your hand is doing) and actuator (providing the sensation of touching a virtual object). And for actions that involve bigger forces, say turning a valve, gloves can have a powered mini-exoskeleton.

As for the more subtle sensing associated with, say, textures, engineers have found ways to program a display’s surface to trick fingers into “feeling” virtual features. By subtle control of electrical forces on the surface of a screen, nerves in fingers can be told to “feel” a bump or feature. Aligning that tactile sense with an image gives the illusion of feeling the texture of the image. This is done by building microscopic conductive layers into displays using the same tools and materials already employed to build the displays.

Such haptics are first targeting making automotive displays safer by allowing the driver to use them by feel. The same technology leads to not only a more reliable control panel or dashboard of switches (since it’s no longer mechanical but virtual), but also a more customizable one that can be easily upgraded.[13] As the technology of hard displays migrates into the technology of flexible, conformal displays, the haptic surface can be wrapped around the shape and contours of objects including, eventually, hands.[14] Such “artificial skin” is now in a prototype stage analogous to where touch screens for phones were circa the late 1990s. It wasn’t long after that (2007) that the market-changing iPhone was launched. The 2020s will see tactile-sensing “gloves” that are close to skin-like.

The suite of technologies now exists to enable, in the 2020s, hyper-realistic virtual simulators for skills training for many applications beyond big machines, and also to see such capabilities available remotely. This will permit not only virtual but also online apprenticeship for many skilled trades. It will also dramatically improve real-time, human-machine interfaces in heavy industries and service sectors.

AR/VR: Distinctions & Forecast

Those who are sophisticated in these technologies will notice we have not distinguished, as the engineering community does, amongst the various types of virtual, augmented and mixed-reality systems. There are plenty of gradations between VR and AR, and there are many applications for both beyond skills training and education, including in nearly every aspect of commerce. VR attempts to create an entirely artificial simulation, in many cases a fully immersive environment wherein, for example, a technician or student can undertake a trial run on driving or repairing a machine’s digital simulacrum. AR doesn’t attempt to replicate reality but instead “augments” it by superimposing information and/or images onto reality. A repair technician (or physician) using AR glasses can see what’s inside a machine before “lifting the hood,” or a tourist looking at the Coliseum can see a rendering of what it might’ve looked like in Roman times, with historical information crawling like subtitles below the view.

For AR to break into common business use and everyday wear (to become as ubiquitous as, say, laptops) will require meeting consumers’ demands in performance, cost and fashion. It is a technological leap that is, in fact, comparable to going from desktops to laptops. But that prospect is now visible in the pre-commercial products emerging from various startups and from bigger tech companies such as Niantic, Facebook, Google and Apple.

Forecasters now see sales of AR/VR devices rising from 1 million units in 2020 to over 20 million by 2025. While businesses will account for 85 percent of those purchases, that’s similar to the percentage seen in the early adoption of desktop computers.[15] What will subsequently follow is the embedding of AR capabilities into contact lenses. That idea is no longer fanciful but feasible, with notional prototypes using the emerging class of flexible, bio-compatible electronics.[16]

While education, healthcare and advertising are all big magnets for VR and AR (all are also the focus of enormous venture investments), the biggest single locus for VR and AR spending is found in entertainment.[17] Advances in the entertainment market will, just as they have throughout history, greatly benefit all others. That includes, in particular and in our near future, the challenge of upskilling and training enough people to fill the looming gaps in the great skilled trades.

This article is an excerpt adapted from the book The Cloud Revolution: How the Convergence of New Technologies Will Unleash the Next Economic Boom and a Roaring 2020s. It is reprinted with permission of the author.

Mark Mills is a Manhattan Institute Senior Fellow, a Faculty Fellow in the McCormick School of Engineering at Northwestern University and a cofounding partner at Cottonwood Venture Partners, focused on digital energy technologies. Mills is a regular contributor to Forbes.com and writes for numerous publications, including City Journal, The Wall Street Journal, USA Today and Real Clear. Early in Mills’ career, he was an experimental physicist and development engineer in the fields of microprocessors, fiber optics and missile guidance. Mills served in the White House Science Office under President Ronald Reagan and later co-authored a tech investment newsletter. He is the author of Digital Cathedrals and Work in the Age of Robots. In 2016, Mills was awarded the American Energy Society’s Energy Writer of the Year. On October 5, 2021, Encounter Books will publish Mills’ latest book, The Cloud Revolution: How the Convergence of New Technologies Will Unleash the Next Economic Boom and a Roaring 2020s.

References

| Note | Reference |
|---|---|
| 1 | Autor, David, and Anna Salomons. “New Frontiers: The Evolving Content and Geography of New Work in the 20th Century.” NBER Economics of Artificial Intelligence, May 2019. https://stuff.mit.edu/people/Autor-Salomons-NewFrontiers.pdf |
| 2 | Caballar, Rina Diane. “Programming Without Code: The Rise of No-Code Software Development.” IEEE Spectrum, March 11, 2020. https://spectrum.ieee.org/tech-talk/computing/software/programming-without-code-no-code-software-development |
| 3 | Ziobro, Paul. “UPS Offering Buyouts to Management Workers.” The Wall Street Journal, September 17, 2020. https://www.wsj.com/articles/ups-offering-buyouts-to-management-workers-11600378920 |
| 4 | McFadden, Christopher. “The World’s First Commercially Built Flight Simulator: The Link Trainer Blue Box.” Interesting Engineering, August 21, 2018. https://interestingengineering.com/the-worlds-first-commercially-built-flight-simulator-the-link-trainer-blue-box |
| 5 | Vanfossen, Lorelle. “Virtual Reality Pioneer: Tom Furness.” Educators in VR, May 31, 2019. https://educatorsinvr.com/2019/05/31/virtual-reality-pioneer-tom-furness/ |
| 6 | “LX6 – Medium Fidelity Simulator Platform – Built for High Throughput Training.” Immersive Technologies – Expect Results. Accessed April 15, 2021. https://www.immersivetechnologies.com/products/LX6-Medium-Fidelity-Training-Simulator-for-Surface-Mining.htm |
| 7 | Vara, Jon. “Heavy Equipment Simulators.” JLC Online, February 1, 2012. https://www.jlconline.com/business/employees/heavy-equipment-simulators_o |
| 8 | Greig, Jonathan. “Using ‘Star Wars’ as Inspiration, Hologram Maker Imagines New Future for Smartphones.” TechRepublic, March 16, 2021. https://www.techrepublic.com/article/using-star-wars-as-inspiration-hologram-maker-imagines-new-future-for-smartphones/ |
| 9 | Dormehl, Luke. “Facebook Is Making AR Glasses That Augment Hearing.” Digital Trends, November 1, 2020. https://www.digitaltrends.com/features/facebook-ar-glasses-deaf/ |
| 10 | Brandessence Market Research. “Gesture Recognition Market.” April 13, 2020. https://brandessenceresearch.com/PressReleases/gesture-recognition-market-is-expected-to-reach-usd-25551-99-million-by |
| 11 | Ishii, Hiroshi. “Tangible Bits.” Proceedings of the 8th international conference on Intelligent user interfaces – IUI ‘03, 2003. https://doi.org/10.1145/604045.604048 |
| 12 | Begole, James. “The Dawn Of The Age Of Responsive Media.” Forbes, January 12, 2016. https://www.forbes.com/sites/valleyvoices/2016/01/12/the-dawn-of-the-age-of-responsive-media/#61d0eca8bce8 |
| 13 | LoPresti, Phillip. “Surface Haptics: A Safer Way for Drivers to Operate Smooth-Surface Controls.” Electronic Design, December 3, 2020. https://www.electronicdesign.com/markets/automotive/article/21145025/surface-haptics-a-safer-way-for-drivers-to-operate-smoothsurface-controls |
| 14 | Park, Sulbin, Byeong-Gwang Shin, Seongwan Jang, and Kyeongwoon Chung. “Three-Dimensional Self-Healable Touch Sensing Artificial Skin Device.” ACS Applied Materials & Interfaces 12, no. 3 (2019): 3953–60. https://doi.org/10.1021/acsami.9b19272 |
| 15 | Needleman, Sarah E., and Jeff Horwitz. “Facebook, Apple and Niantic Bet People Are Ready for Augmented-Reality Glasses.” The Wall Street Journal, April 6, 2021. https://www.wsj.com/articles/facebook-apple-and-niantic-bet-people-are-ready-for-augmented-reality-glasses-11617713387 |
| 16 | Kaplan, Jeremy. “Future of Vision: Augmented Reality Contact Lenses Are Here.” Digital Trends, March 2, 2021. https://www.digitaltrends.com/features/augmented-reality-contact-lenses-vision/ |
| 17 | “An Introduction to Immersive Technologies.” Vista Equity Partners, August 10, 2020. https://www.vistaequitypartners.com/insights/an-introduction-to-immersive-technologies/ |